AIX Development: How Threads Really Compete for Power

ATS Group's Justin Bleistein continues his AIX Development series, debunking the illusion of simultaneous processes and explaining how AIX manages CPU usage through sophisticated scheduling

From the time I started working with AIX on IBM RISC System/6000 (RS/6000) systems, I have been captivated by operating systems. Their internal algorithms, hardware interaction and software interfaces are intriguing. It all seemed like magic to me when I was new to the field. However, as I got more involved in performance tuning AIX, I gained a deeper understanding of the complexity and sophistication of operating systems. Performance tuning heightened my curiosity, especially about how AIX prioritizes programs for execution. I discovered that it was far more intricate than I initially thought.

The OS must make numerous decisions based on specific criteria and apply various algorithms, considering programs requested by different users on the same system. In this article, I aim to explore the specifics of how AIX allocates CPU usage to various programs. To clarify, I am not affiliated with IBM and don’t have access to the AIX source code. My insights are purely based on my experiences and knowledge gathered over the years.

The Illusions of Internal OS Mechanisms

In my studies of operating system internals, I couldn’t help but conclude that the OS was, in some ways, deceiving me. Two of these deceptions are at the core of the way an OS works. One is the view that more processes can run on your system than you have CPU cores. The other is that you have more real memory than you have. However, in this article, we are primarily focused on the CPU “lie.”

Now, I’ll correct my terminology before going further. I’ll replace the word “deception” with “illusion” and omit the term “lie.” “Illusion” seems more apt considering the context, although we will revisit the term later in the article. Computers are not lying to you; they have enough CPUs to run your system’s programs. I understand why I initially thought this was a lie; I knew a CPU could only run one process at a time. The OS seemingly creates the illusion that a CPU can run more than one program simultaneously and that the system is running more programs than available CPUs. At least, that is what it looks like to us when working with the system.

That is what this article is about. How does a computer program get executed on the processor? How does a program get “selected” to run on a computer processor? The answer to those two questions will hopefully help to clarify the perception of the two illusions.

Programs Running on the CPU

First, let’s clarify what a computer program is. A computer program is code that forms an application or software used on a system. When a program runs, its threads execute on the processor, or CPU. A thread is the smallest unit of execution that can be submitted to the CPU. All programs have at least one thread, and many are written with multiple threads, allowing the program to perform several tasks simultaneously, especially on systems with multiple CPUs.

For example, I once wanted a budgeting program I wrote in C to download a spreadsheet while simultaneously performing calculations for that spreadsheet. This allowed the spreadsheet to be ready for the data as soon as the calculations were done, ultimately saving time. This is an example where multiple threads can run on separate CPUs, performing different tasks simultaneously.
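A minimal sketch of the same pattern, here in Python rather than C. The function names, the figures, and the short sleep that stands in for the download are all hypothetical; the point is only that one process starts two threads and waits for both. In CPython the two threads overlap here because the simulated download spends its time waiting rather than computing.

```python
import threading
import time

results = {}

def download_spreadsheet():
    # Hypothetical stand-in for a network download: just wait briefly.
    time.sleep(0.2)
    results["spreadsheet"] = "budget.csv"

def run_calculations():
    # Hypothetical stand-in for the budget calculations.
    results["total"] = sum([1200, 350, 75])

def run_both():
    threads = [
        threading.Thread(target=download_spreadsheet),
        threading.Thread(target=run_calculations),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until both threads have finished
    return results

if __name__ == "__main__":
    print(run_both())
```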

To illustrate using a non-IT example, imagine my wife and I preparing to take our kids out. I view our combined efforts as two threads working together. I act as one thread, helping my oldest son put on his shoes, while my wife is the other thread, packing their snacks. This analogy reflects the idea of doing things in parallel to achieve our goal more efficiently. The process here involves getting the kids out the door and running two threads. Throughout this article, I will use the terms process and thread interchangeably.

If you are on an AIX LPAR and type in the UNIX command ps -ef, you will see a list of what looks to be “running” processes for that OS. What does this mean? Are all these processes running at once? How can that be? You may be thinking, if a CPU can run one program at a time and I only have two CPU cores (or one for that matter), shouldn’t I only see at most two lines in the ps -ef command output? This command does, after all, display processes running on the system. So why do I see so many?

If you have no third-party software like databases or applications running on your LPAR, you will still see about 30-50 processes on even the idlest AIX system. These include /etc/init, /usr/sbin/syncd, /usr/lib/errdemon, /usr/sbin/srcmstr, /usr/sbin/inetd, /usr/sbin/syslogd and /usr/sbin/cron. These are purely user processes; the count doesn’t include kernel processes, which are listed by the ps -k command. Most of these processes are daemons, which run in the background, waiting for work or listening on an open port. These services come with AIX and start automatically at boot.

In future articles, we will explore user vs. kernel processes further. There are quite a few interesting kernel processes. One is called wait. A CPU must always be executing something, so when a CPU is idle, its wait process runs and accumulates CPU time. Every CPU has its own wait kernel process for this purpose, which sounds counterintuitive.

Let’s consider an example LPAR configuration with four virtual processors assigned to it, running in SMT-8 mode. (SMT stands for Simultaneous Multi-Threading, comparable to Hyper-Threading on the Intel platform; we will cover this in more detail in a future article.) This means there will be eight logical processors for each virtual processor, so the ps -k command will show 32 wait processes for this LPAR.
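The arithmetic for this example LPAR, as a quick sketch. The function name is only for illustration.

```python
def logical_processors(virtual_processors, smt_threads):
    # Each virtual processor presents one logical processor per SMT thread,
    # and each logical processor gets its own wait kernel process.
    return virtual_processors * smt_threads

if __name__ == "__main__":
    print(logical_processors(4, 8))  # prints 32
```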

If we have, by default, 30-50 user processes and 20 or so kernel processes, how can they all run on an LPAR that has only four virtual processors? Just in case your mind was headed down the SMT hallway, having 32 logical SMT CPUs does not mean you can run 32 more processes on the system simultaneously, so forget about that. Even if you could, the math still doesn’t work; there are far more processes (user and kernel) than CPU cores.

So what is going on here? If the ps -ef and ps -k commands show everything that is running in user and kernel space, respectively, then the system has to be lying to me or, at best, creating an illusion. No, it’s not lying or creating an illusion. What makes this possible is a very sophisticated mechanism known as the CPU scheduler.

When a program runs, it doesn’t just hop on the CPU and run until completion. The program is often switched on and off the CPU during its run, based on complex algorithms implemented by the OS CPU scheduler. Technically, it is the program’s threads that are switched on and off the CPU. This happens very quickly.

The AIX CPU Scheduler Mechanism

All operating systems, including AIX, Linux and Windows, have scheduling mechanisms. AIX has its own CPU scheduler implementation, but the concept is not unique to AIX. The CPU scheduler should not be confused with job-scheduling tools like cron, which run programs at specific times or intervals. The CPU scheduler comes into play only after a program is invoked, manually or by a scheduling tool like cron. Its role is to manage threads, ensuring they are dispatched to the CPU and controlling their execution.

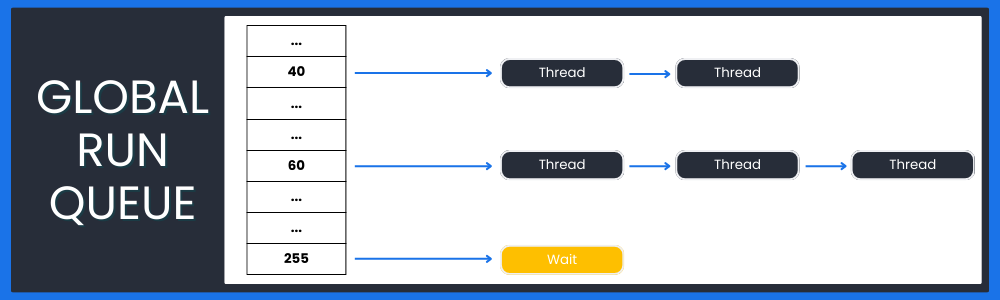

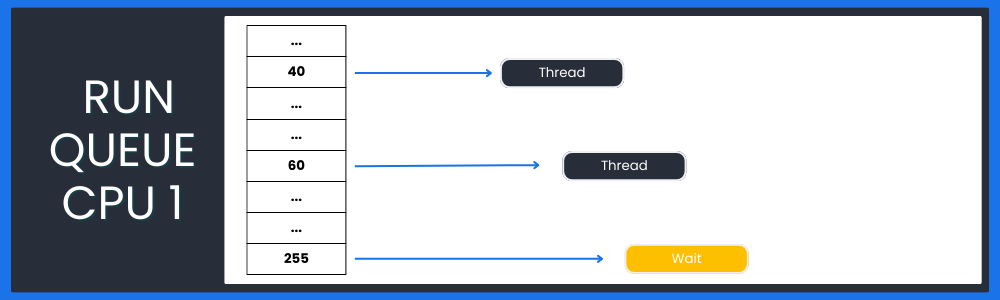

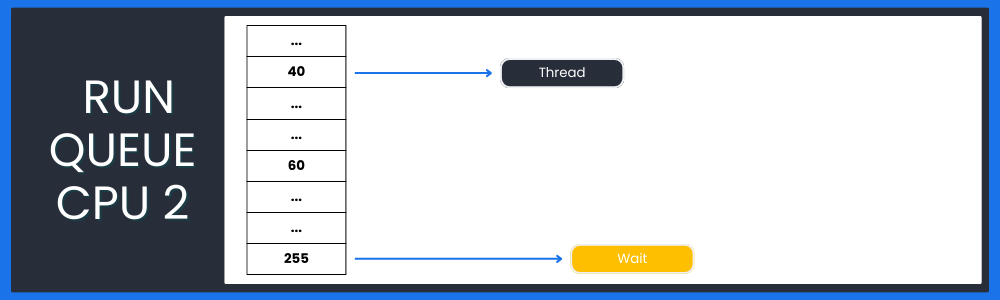

The core structure of the AIX CPU scheduler is the run queue, where AIX maintains a list of runnable threads organized by priority, awaiting selection to execute on the CPU. Each thread is assigned one of 256 priority values, and each value corresponds to a position in the run queue. AIX uses a global run queue plus an individual run queue for each CPU. This structure helps avoid lock contention, as a single run queue shared by all CPUs would require obtaining a lock every time a thread is added or removed. Each run queue is essentially a set of doubly linked lists, one for each thread priority.
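A toy model of one such run queue, offered as a sketch rather than a description of the AIX kernel code. The class and method names are hypothetical, Python's deque stands in for each doubly linked list, and the selection rule assumes that the lowest priority number is the most favored.

```python
from collections import deque

NUM_PRIORITIES = 256  # priority values 0 (most favored) to 255 (least favored)

class RunQueue:
    """Toy run queue: one FIFO list of runnable threads per priority value."""

    def __init__(self):
        self.levels = [deque() for _ in range(NUM_PRIORITIES)]

    def enqueue(self, thread_name, priority):
        self.levels[priority].append(thread_name)

    def pick_next(self):
        # Scan from the most favored priority (lowest number) upward.
        for level in self.levels:
            if level:
                return level.popleft()
        return None  # nothing runnable

if __name__ == "__main__":
    rq = RunQueue()
    rq.enqueue("backup", 90)
    rq.enqueue("shell", 60)
    rq.enqueue("editor", 60)
    print(rq.pick_next(), rq.pick_next(), rq.pick_next())  # shell editor backup
```

Keeping one list per priority means the dispatcher never has to sort; it only needs the first non-empty list.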

The CPU scheduler is implemented at the kernel level, where key kernel processes such as sched and swapper play vital roles. The sched process recalculates thread priorities, while the swapper process handles tasks like removing zombie process table entries. You can observe these kernel processes using the ps -k command. Together, they manage thread scheduling according to the system’s scheduling policy. In AIX, the default policy is called SCHED_OTHER.

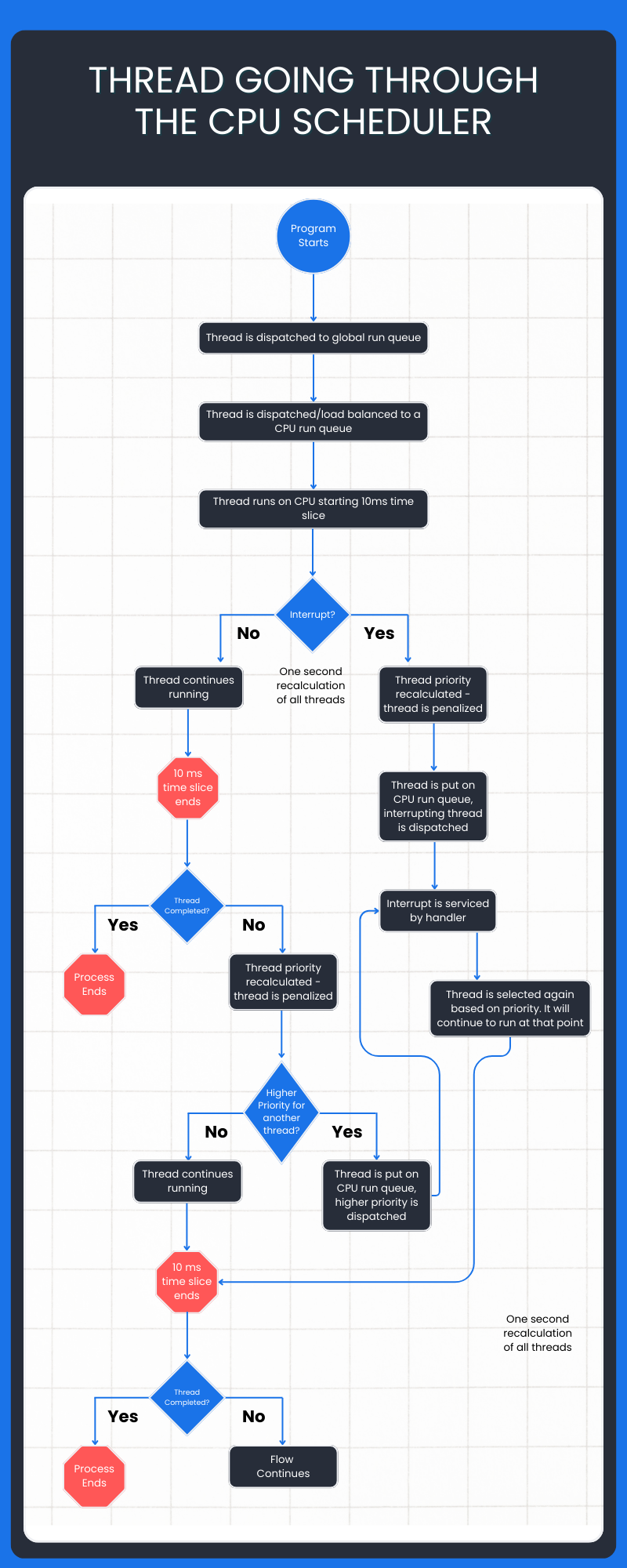

One common source of confusion about CPU scheduling is that the operating system shows more processes than there are CPUs available to run them. This isn’t an illusion; all processes take turns using the CPU. This switching happens incredibly fast, typically every 10 milliseconds (1/100 of a second), meaning that threads on a CPU can be rotated about 100 times per second. A thread’s turn on the CPU is called a “time slice” or “quantum.” However, a full time slice isn’t guaranteed, as the kernel can preempt threads for various reasons. For example, an external interrupt, such as a hardware event, may force a thread to relinquish the CPU. Similarly, an internal event, such as an I/O call, can cause a thread to give up the CPU voluntarily.
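The turn-taking can be sketched with a simple round-robin loop. This is a deliberately reduced model: one CPU, threads of equal priority, no interrupts, and hypothetical thread names and CPU requirements.

```python
from collections import deque

TIME_SLICE_MS = 10  # the 10 ms time slice described above

def round_robin(cpu_needs_ms):
    """Return the order in which threads get the CPU, one time slice at a time.

    cpu_needs_ms maps a thread name to the CPU time it still needs (ms).
    """
    queue = deque(cpu_needs_ms.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        timeline.append(name)  # thread runs for up to one slice
        remaining -= TIME_SLICE_MS
        if remaining > 0:
            queue.append((name, remaining))  # back of the queue for another turn
    return timeline

if __name__ == "__main__":
    print(round_robin({"A": 30, "B": 10, "C": 20}))
    # ['A', 'B', 'C', 'A', 'C', 'A']
```

All three threads appear to be "running" for the whole 60 ms, yet at any instant only one of them holds the CPU.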

Once a thread gives up the CPU, it returns to a run queue to await its next turn. If the thread was preempted, it returns by default to the run queue of the CPU it last ran on, which preserves processor affinity (also known as soft affinity). When threads must be rebalanced across CPUs, AIX’s load-balancing mechanisms redistribute them. An environment variable, RT_GRQ, can also be set so that a thread is placed on the global run queue after it relinquishes the CPU.

Every thread in AIX is assigned an initial priority, with lower numbers indicating higher priority. For example, the default priority for a thread is 40, and AIX typically adds a nice value of 20, resulting in a final priority of 60. The nice value helps determine a thread’s scheduling priority. You can use the nice command to adjust the priority of processes, allowing for finer control over their execution.

Threads with numerically higher priority values, such as the wait thread, are less likely to be selected to run. With a priority of 255 (the least favored), the wait thread is always ready to run, but it executes only when no other thread is runnable. As a result, the wait processes in the ps -k output show more accumulated CPU time on an idle system than on a busy one.
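A small sketch of why wait accumulates time only on an idle CPU. The function name and the sample workloads are hypothetical, and the model assumes every other thread has a priority value below 255.

```python
WAIT_PRIORITY = 255  # least favored priority, used by the wait thread

def wait_ticks(runnable_per_tick):
    """Count the clock ticks that go to the wait thread on one CPU.

    runnable_per_tick lists, for each tick, the priority values of the
    other runnable threads (an empty list means the CPU would be idle).
    """
    ticks = 0
    for priorities in runnable_per_tick:
        # wait is always a candidate; the lowest number wins the CPU.
        winner = min(priorities + [WAIT_PRIORITY])
        if winner == WAIT_PRIORITY:
            ticks += 1
    return ticks

if __name__ == "__main__":
    idle_system = [[], [], [60], [], []]
    busy_system = [[60], [60, 70], [60], [], [90]]
    print(wait_ticks(idle_system), wait_ticks(busy_system))  # 4 1
```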

As threads are preempted and reintroduced to the run queues, the system continuously balances and schedules them, creating the perception of seamless multitasking. In reality, threads constantly compete for time on the CPU, and this switching happens far faster than a person can perceive. For example, a process like importing data into an Oracle database might run for two hours, but it won’t be occupying the CPU for the entire duration. The process likely leaves the CPU thousands of times, either because it is preempted or because it gives up the CPU while waiting on I/O calls to the disk.

Understanding AIX’s scheduling mechanisms clarifies how threads interact with the CPU and how the system handles multitasking. This knowledge is essential for tuning system performance and ensuring efficient process management.

Thread priority values in AIX are recalculated at each clock tick (or time slice) and once per second. In the ps -ef command output, the C column increments with every clock tick that a thread actively runs, up to a maximum value of 120.

The default scheduling policy, SCHED_OTHER, is a variable-priority policy. If a thread is still running at the end of its time slice, it is penalized by being reassigned a numerically higher (less favorable) priority value. This penalty gives other threads a chance to access the CPU. Every second, a global recalculation occurs, adjusting the priorities of all threads in the run queues, not just those currently running. With a 10-millisecond time slice, this means all thread priorities are updated after every 100 time slices. Threads that voluntarily relinquish the CPU during their time slice also have their priorities recalculated.

This system ensures that all threads get a fair opportunity to execute when resources like CPU cores are limited. The OS cannot simply allow threads to run to completion or select them sequentially from the queue; doing so would cause the system to stall and potentially crash. To maintain system stability, the CPU must be shared efficiently, and this includes making time for critical kernel processes that handle internal housekeeping tasks essential to the health of the operating system.

A helpful analogy is a traffic intersection: Traffic lights allow vehicles from one direction to proceed for some time, then pause to let traffic from another direction flow. Similarly, the CPU scheduler ensures an orderly and fair distribution of CPU time among all processes.

In addition to OS-level CPU scheduling, there is another mechanism, the Dispatch Wheel, which is part of the IBM PowerVM hypervisor. It governs CPU time entitlement for shared-processor logical partitions (SPLPARs). While it operates similarly to the OS scheduling mechanism discussed here, the Dispatch Wheel manages CPU allocation across partitions rather than across threads within one OS.

The flowchart above clarifies how threads are dispatched and executed on a CPU, offering a solid high-level visual overview. While it doesn’t cover every scenario, it effectively illustrates the core concepts. This article introduces several key topics in some detail, but it only scratches the surface of the intricacies involved. There are always nuances and logistical details, and because the details of the CPU scheduler’s algorithm are proprietary, this account is only as accurate as my own understanding.

As an experienced AIX sysadmin and PowerVM engineer with a deep understanding of OS internals, I felt it was essential to share this knowledge. This article provides a clear overview of the complexity behind OS CPU scheduling, helping you appreciate the intricate processes happening when a program runs.

The information presented here should serve as a foundation to enhance your AIX OS tuning skills. For further insights, I encourage you to dive deeper into the subject. While they may not specialize in AIX, computer science professionals can offer a deeper understanding of CPU schedulers and their broader principles.

Though much of this information is proprietary, uncovering these details has been challenging and rewarding. I hope you find the same value in exploring this subject further.