Developing an Audit Process for Data Set Encryption

Two of the challenges of planning and implementing data set encryption under z/OS are determining whether the data sets targeted for encryption are eligible and, during rollout, which have actually ended up encrypted. Because pervasive encryption can be a large project spanning many phases, those involved with implementation will want the ability to track progress. In addition, other teams such as your CISO organization, auditors and customers will want to be kept apprised in order to assess risk and mitigate exceptions along the way.

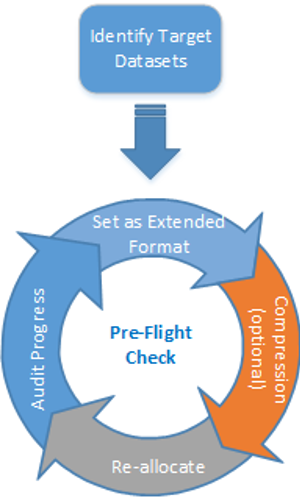

Before an organization determines the data sets to be encrypted, the implementation team must determine data set eligibility. For example, BSAM/QSAM and VSAM data sets must be extended format, although APAR OA56622 will soon broaden support, allowing basic and large format data sets to be encrypted. Customers should consider which data sets are ineligible for encryption, along with other eligibility requirements. They should also consider compression as a way to reduce the size of the data being encrypted (e.g., the use of zEDC for BSAM and QSAM data sets). Once these attributes are addressed, you can develop a pre-flight check to audit data set eligibility. A pre-flight check examines target data sets and reports on attributes such as data set name, creation date, SMS data class, whether the data set is extended format or compressed, and more.
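As a rough illustration of what a pre-flight check evaluates, here is a minimal Python sketch. The record layout, field names and the data class name `DCEXTC` are hypothetical; a real check would populate these fields from catalog or SMF data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataSetInfo:
    """Hypothetical pre-flight record; field names are illustrative only."""
    name: str
    creation_date: str          # Julian date, e.g. "2019.101"
    data_class: Optional[str]   # None when no SMS data class is assigned
    extended_format: bool
    compressed: bool


def preflight_findings(ds: DataSetInfo) -> List[str]:
    """Return the reasons a data set needs attention before encryption."""
    findings = []
    if not ds.extended_format:
        findings.append("not extended format")
    if ds.data_class is None:
        findings.append("no SMS data class")
    if not ds.compressed:
        # Compression is a recommendation, not an eligibility requirement.
        findings.append("not compressed (optional)")
    return findings


if __name__ == "__main__":
    sample = DataSetInfo("PROD.SAMPLE.DATA", "2019.090", None, False, False)
    for finding in preflight_findings(sample):
        print(f"{sample.name}: {finding}")
```

An empty list means the data set passed every check in this sketch; it does not cover the other eligibility requirements mentioned above.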

Pre-Flight Check Process

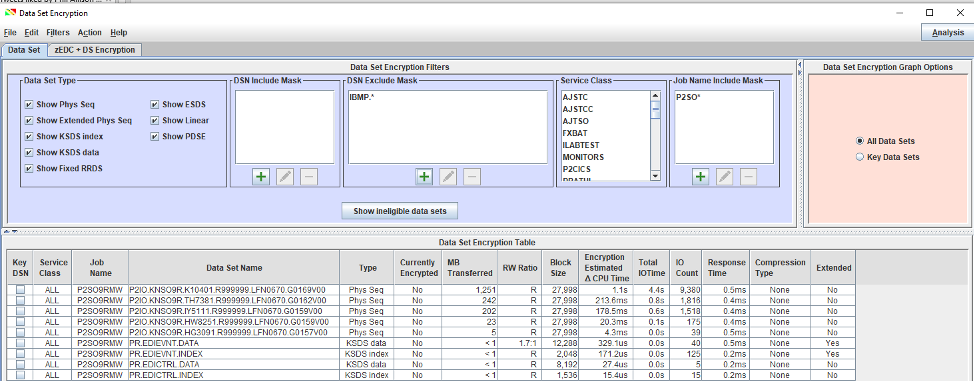

A pre-flight check can be done using internally developed tools or using the IBM Z Batch Network Analyzer (zBNA). To use zBNA, download the SMF batch extraction utility (CP3KEXTR) and the zBNAInstallwithJava.exe file, then install zBNA on your workstation. If you choose zBNA, use its filtering capabilities to isolate the data sets you wish to encrypt. zBNA filtering has improved significantly over the past year with data set encryption in mind, and is very flexible. You can include (or exclude) certain data sets in a pre-flight check by type, by fully qualified data set name, or by masks using wildcards. You can also filter by application or workload type, auditing data sets targeted for encryption based on jobname, jobname mask or WLM service class. See Figure 1 for a data set encryption report filtered by jobname mask. Since zBNA uses SMF data for a given period, it reports on all data sets used that match the criteria you provide, even data sets deleted purposely at the end of a batch cycle or as part of housekeeping.
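If you build your own tooling, include/exclude filtering by data set mask can be sketched as follows. The wildcard rules here are an assumption modeled on common z/OS conventions (`*` within one qualifier, `**` across qualifiers, `%` for a single character); they are not taken from zBNA, and the data set names are made up.

```python
import re
from typing import Iterable, List, Sequence


def mask_to_regex(mask: str) -> re.Pattern:
    """Translate a data set mask into a compiled regular expression."""
    parts = []
    i = 0
    while i < len(mask):
        if mask.startswith("**", i):
            parts.append(r".*")        # any characters, including periods
            i += 2
        elif mask[i] == "*":
            parts.append(r"[^.]*")     # any characters within one qualifier
            i += 1
        elif mask[i] == "%":
            parts.append(r"[^.]")      # exactly one non-period character
            i += 1
        else:
            parts.append(re.escape(mask[i]))
            i += 1
    return re.compile("".join(parts) + r"\Z")


def select(names: Iterable[str],
           include: Sequence[str],
           exclude: Sequence[str] = ()) -> List[str]:
    """Keep names matching any include mask and no exclude mask."""
    inc = [mask_to_regex(m) for m in include]
    exc = [mask_to_regex(m) for m in exclude]
    return [n for n in names
            if any(p.match(n) for p in inc)
            and not any(p.match(n) for p in exc)]


if __name__ == "__main__":
    names = ["PAY.PROD.MASTER", "PAY.PROD.WORK.TEMP1", "PAY.TEST.MASTER"]
    print(select(names, include=["PAY.PROD.**"], exclude=["**.TEMP%"]))
```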

Using zBNA for a pre-flight audit does have some limitations. SMS data class is not reported, so you can’t see whether a data class is assigned. This means some research may be needed to determine whether the data class provides support for extended format or compression. zBNA doesn’t provide creation date either. Creation date is important because if a data class was recently changed to allow extended format or compression, or if an ACS rule was changed to assign a data class, the creation date shows whether the data set was recreated before or after the change. Since SMS drives the data class routine when a data set is created, reconciling creation date against the date of a data class change is extremely important. Finally, zBNA doesn’t provide external security manager information.
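The creation date reconciliation described above can be sketched in a few lines of Python. This assumes dates are available in the Julian `yyyy.ddd` form used elsewhere in this article; the function names are illustrative.

```python
from datetime import date, datetime


def parse_julian(value: str) -> date:
    """Convert a Julian date such as '2019.101' to a calendar date."""
    return datetime.strptime(value, "%Y.%j").date()


def created_before_change(creation: str, class_change: str) -> bool:
    """True when the data set predates the data class (or ACS) change,
    meaning it has not yet picked up the new attributes."""
    return parse_julian(creation) < parse_julian(class_change)


if __name__ == "__main__":
    # Data set created on day 95, data class changed on day 101.
    print(created_before_change("2019.095", "2019.101"))
```

Because these dates carry no time of day, a data set created on the same day as the change is ambiguous and is worth reviewing by hand.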

‘Roll Your Own’ Pre-Flight Audit Process

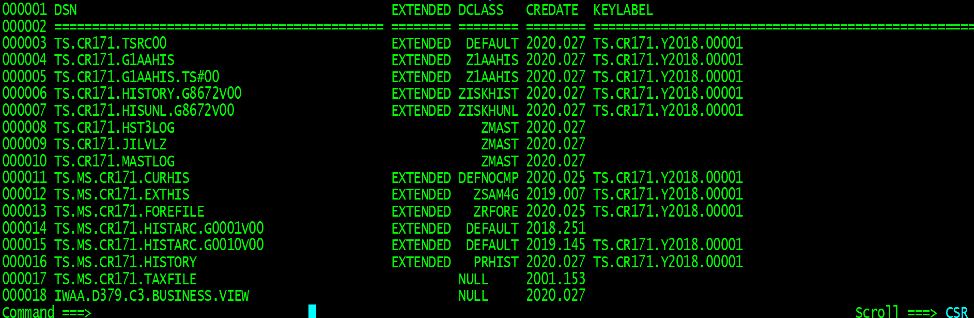

Because of these limitations, many customers may opt to “roll their own” pre-flight audit process. Rolling your own allows you to add another dimension to the audit, for example, interrogating RACF to determine which data set profiles protect each data set within the audit scope or interrogating user catalogs for information such as creation date. Although catalog metadata can be obtained by a LISTCAT or by using the Catalog Search Interface, many customers utilize powerful catalog management tools which can provide custom reporting on data sets and their attributes by data set name or data set mask. These tools can provide a more thorough view and will come in handy later as data sets become encrypted and encryption key information is retrieved from the encryption cell of the catalog.

Figure 2, below, is one example of a roll-your-own audit using a third-party catalog utility. The audit criteria search the catalog for a group of data sets targeted for encryption. This audit identified a number of data sets in rows 8-10 that are not extended format. The data sets in rows 17-18 have no data class and will have to be addressed via ACS routines or assigned a data class via JCL. This particular audit also examines the encryption cell within each catalog entry, showing which key label is being used to encrypt the data set.

Once exceptions for extended format are addressed using SMS data class changes or JCL, in most cases the data sets will need to be recreated. In certain cases this can be disruptive to the application. How a data set is recreated depends on its type. For a generation data group (GDG), the next generation created should pick up the extended format attribute. VSAM files and non-GDG sequential files will need to be recreated explicitly. If you are encrypting Db2 table space data sets, an online reorg can be run, which is non-disruptive. Finally, if you’re a zDMF customer, zDMF can be used to convert VSAM and non-GDG sequential files to extended format non-disruptively.

Reducing Overhead Costs

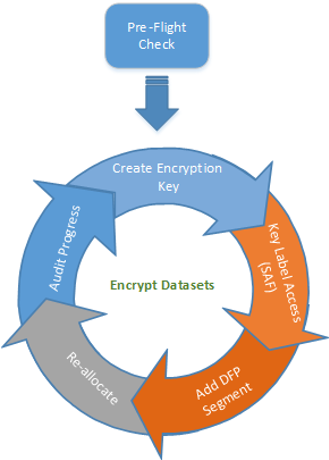

Many customers are sensitive to the overhead associated with encryption. z/OS DFSMS can be used to improve efficiency by compressing extended format files before they are encrypted. Two of the more popular compression methods are zEDC and generic compression. Depending on the hardware chosen, zEDC offloads compression to a specialized PCIe card on the IBM Z host. On the IBM z15, zEDC compression functionality was moved to an integrated accelerator that resides on the processor chip. zEDC can compress BSAM and QSAM files at very high compression ratios. For customers who do not have zEDC, generic compression is an option that can be used for BSAM, QSAM and VSAM files. Either compression technique can be controlled by the DFSMS data class, giving customers the opportunity to implement compression as they convert data sets to extended format. Because data set reallocation is a requirement that may not occur for all intended data sets at once, implementing extended format can be an iterative process. See Figure 3, below, for a visual of this iterative process.

Implementing Data Set Encryption

Once data sets are eligible for encryption, each data set you wish to encrypt must be assigned a key label and then be reallocated as a new data set. Before going down this path, you must first decide where key labels for encrypting data sets will be controlled. The key label must already exist in ICSF and is essentially your magic data set encryption switch. Data set encryption gives you three options for supplying a key label for a data set. In order of precedence, these options are:

- Via external security manager policy, for example a RACF DFP segment containing a key label within a data set profile

- By DD statement – where the key label is explicitly inserted within JCL, dynamic allocation, TSO ALLOCATE for a new data set (or IDCAMS DEFINE)

- Via DFSMS policy – using a key label within the DFSMS data class.
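The order of precedence in the list above can be expressed as a small lookup. This is only a sketch of the rule as stated here; the function name, source descriptions and key labels are illustrative.

```python
from typing import Optional, Tuple


def effective_key_label(racf_dfp: Optional[str],
                        allocation: Optional[str],
                        data_class: Optional[str]) -> Optional[Tuple[str, str]]:
    """Return (source, key label) for the first source that supplies a
    key label, checked in order of precedence, or None if none does."""
    candidates = (
        ("security manager policy", racf_dfp),
        ("DD statement / allocation", allocation),
        ("DFSMS data class", data_class),
    )
    for source, label in candidates:
        if label:
            return source, label
    return None


if __name__ == "__main__":
    # A label in the RACF DFP segment wins over one coded in JCL.
    print(effective_key_label("KEY.PROD.A", "KEY.JCL.B", None))
    # No label from any source: the data set would not be encrypted.
    print(effective_key_label(None, None, None))
```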

Since data set encryption is designed to protect encryption keys via access control, many customers will opt to use security policy. Using security policy provides a single point of control and avoids embedding key labels within JCL or burdening storage administrators with the responsibility of assigning the proper key. The remainder of this article describes an audit process that uses RACF as the controlling point for assignment of key labels.

As you prepare to encrypt data sets, another key ingredient is providing the necessary access controls so that only authorized users have read access to the key label. When a key label is created and stored in ICSF, you must also define a SAF profile in the CSFKEYS class to protect the key from unauthorized use. Read access to the key label allows users with data set access to encrypt (write) or decrypt (read) data within the data set. In some cases, access to the key label can be more restrictive than access to the physical data set. One example is excluding storage administrators from key label access. Another is limiting access to keys used to encrypt Db2 table space data sets to the Db2 subsystem.

Adding Encryption to the Audit Process

We’ve already identified data set information derived from the catalog in our pre-flight check. Assuming the key label within the DFP segment of a RACF data set profile is your magic data set encryption switch, you will also want to verify:

- What data set profile is protecting the data set? Is there a DFP segment with the expected key label?

- Does the key label of an encrypted data set match the key label defined in the DFP segment?

- Does the data set create date precede the day a key was added to the DFP segment?

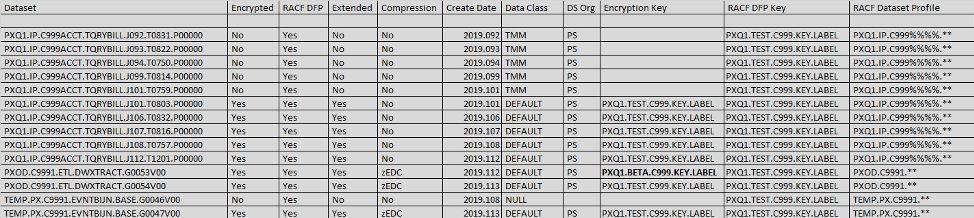

To answer these questions, the trick is to use your favorite programming language, such as SAS or REXX, to join data from the pre-flight check with data derived from the ESM. This will take some parsing and matching of data between the catalog data elements and your ESM. If RACF is your ESM, you will first want to query RACF for each data set to determine which RACF profile is protecting it. If the user ID running the audit has access to RACF display commands, this can be done using the RACF LISTDSD command with the DFP operand. An alternative is to use output from the IRRDBU00 database unload utility. Record type 0410 (Data Set DFP Data) can be used within an audit to determine which data set profiles have DFP segments and the key labels defined to each profile. Using Figure 4 as an example, there are various conditions the audit can expose:

- Encrypted data sets with zEDC compression.

- Data sets that have a key label in the DFP segment but are not encrypted (no encryption key). In the case of the first five records below, the key was defined to the DFP segment, but these data sets, which share the same data set profile, did not become extended format until sometime during the 2019.101 batch cycle.

- Data sets using a different key than what is defined in the DFP segment (data set PXOD.C9991.ETL.DWXTRACT.G0053V00). Is this the result of a key rotation or an error?

- Data sets intended for encryption that have a NULL data class. In the last example, the data set was converted to a data class with extended format and compression after it was found to have failed the pre-flight check.
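The conditions above fall out naturally once catalog data and ESM data are joined by data set name. The following Python sketch assumes both inputs have already been extracted into dictionaries and that the protecting profile has already been resolved for each data set; the input shapes, status strings, data set names and key labels are all hypothetical.

```python
from typing import Dict, Optional, Tuple

# name -> (SMS data class or None, key label from the catalog encryption
# cell or None when the data set is not encrypted)
Catalog = Dict[str, Tuple[Optional[str], Optional[str]]]
# name -> key label in the DFP segment of the protecting profile, or None
DfpLabels = Dict[str, Optional[str]]


def classify(data_class: Optional[str],
             catalog_label: Optional[str],
             dfp_label: Optional[str]) -> str:
    """Assign one audit status to a data set."""
    if catalog_label is not None:
        if catalog_label == dfp_label:
            return "encrypted as expected"
        return "encrypted with a different key label"
    if dfp_label is None:
        return "not targeted for encryption"
    if data_class is None:
        return "key label defined, no data class"
    return "key label defined, not encrypted"


def audit(catalog: Catalog, dfp: DfpLabels) -> Dict[str, str]:
    """Join the two sources by data set name and classify each entry."""
    return {name: classify(dc, label, dfp.get(name))
            for name, (dc, label) in sorted(catalog.items())}


if __name__ == "__main__":
    catalog = {
        "APP.ETL.FILE1": ("DCEXT", "KEY.APP.01"),
        "APP.ETL.FILE2": ("DCEXT", None),
        "APP.ETL.FILE3": (None, None),
    }
    dfp = {name: "KEY.APP.01" for name in catalog}
    for name, status in audit(catalog, dfp).items():
        print(f"{name:<16} {status}")
```

A mismatch status only flags the difference; deciding whether it reflects a key rotation or an error still requires comparing creation dates against the date the DFP segment changed.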

As with the pre-flight check, the audit process for encryption is iterative. Due to availability needs, it may take multiple batch cycles or events to complete the process. One example is Db2 table space data sets. It may be undesirable to perform hundreds or thousands of online reorgs at once, and having an audit allows you to chart your progress through the endeavor. Since the granularity of RACF data set profiles may vary (generic to specific), Figure 5 below depicts how a number of cycles may be required to encrypt a large number of data sets protected by a single key.
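One way to chart progress across cycles is to summarize each audit run by protecting profile. This is a minimal sketch; the input is assumed to be a list of (profile, encrypted) pairs produced by your own audit, and the profile names are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def progress_by_profile(
        rows: Iterable[Tuple[str, bool]]) -> Dict[str, Tuple[int, int, float]]:
    """Return {profile: (encrypted count, total count, percent encrypted)}."""
    counts = defaultdict(lambda: [0, 0])
    for profile, encrypted in rows:
        counts[profile][1] += 1          # every row counts toward the total
        if encrypted:
            counts[profile][0] += 1
    return {p: (enc, total, round(100 * enc / total, 1))
            for p, (enc, total) in counts.items()}


if __name__ == "__main__":
    cycle = [("APP.ETL.**", True), ("APP.ETL.**", False),
             ("APP.ETL.**", True), ("DB2P.DSNDBC.**", False)]
    for profile, (enc, total, pct) in progress_by_profile(cycle).items():
        print(f"{profile:<16} {enc}/{total} encrypted ({pct}%)")
```

Running the same summary after each batch cycle gives a simple trend line per profile.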

Putting Audit to Good Use

Once a target environment has been encrypted, monitoring should be put in place to make sure data continues to be encrypted. The data set encryption audit process can be run at a daily, weekly or similar interval to ensure permanent and temporal data sets are in compliance. It may also be important to determine whether the output from the audit needs to be archived in the event data is later found to be compromised or if there are compliance reasons within your industry. An important topic to address is who will be the consumers of the audit data. Certainly the parties responsible for key management and assignment of keys need to be involved, especially during events such as key rotation ceremonies. Finally, an organization should discuss whether certain outcomes exposed by the audit are considered actionable events that need to be emitted to your SIEM solution or logged as an exception.
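For ongoing monitoring, one simple approach is to compare the current audit run with the previous one and emit an event for each regression. The sketch below assumes each run is reduced to a mapping of data set name to key label (or `None` when not encrypted). The event names and JSON layout are invented for illustration and do not follow any particular SIEM schema.

```python
import json
from typing import Dict, List, Optional

Run = Dict[str, Optional[str]]   # data set name -> key label, None if clear


def regressions(previous: Run, current: Run) -> List[dict]:
    """List data sets that lost encryption or changed key label."""
    events = []
    for name, label in sorted(current.items()):
        before = previous.get(name)
        if before is None:
            continue                     # new or never encrypted: no baseline
        if label is None:
            events.append({"data_set": name,
                           "event": "no_longer_encrypted",
                           "previous_key_label": before})
        elif label != before:
            events.append({"data_set": name,
                           "event": "key_label_changed",
                           "previous_key_label": before,
                           "current_key_label": label})
    return events


if __name__ == "__main__":
    last_week = {"APP.ETL.FILE1": "KEY.APP.01", "APP.ETL.FILE2": "KEY.APP.01"}
    today = {"APP.ETL.FILE1": None, "APP.ETL.FILE2": "KEY.APP.02"}
    for event in regressions(last_week, today):
        print(json.dumps(event))         # one JSON document per line
```

A key label change may simply reflect a planned key rotation, so such events are better treated as prompts for review than as failures.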