Use Nginx to Make Web Content Zoom!

In this blog from Jesse Gorzinski, learn why nginx might be the biggest hidden gem in the open-source ecosystem

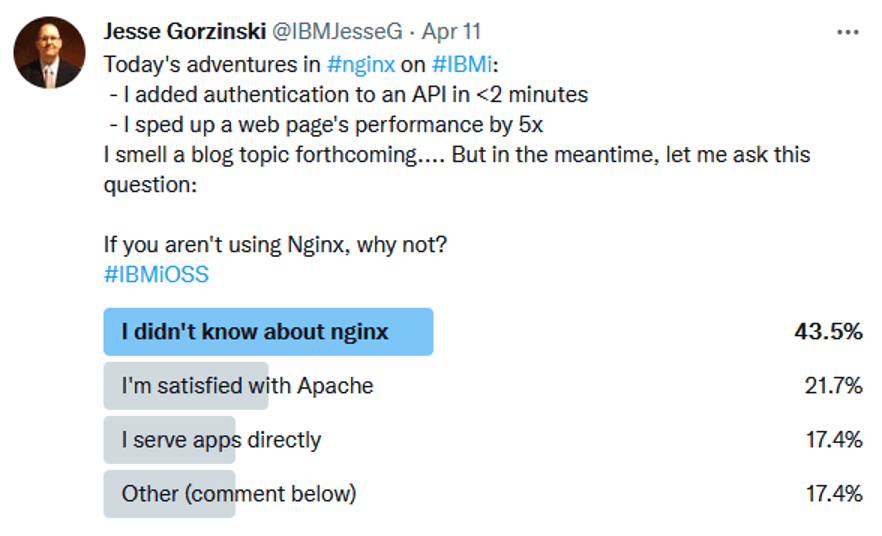

In my previous blog post, I talked about some open-source “hidden gems.” They can do everything from processing JSON to analyzing disk space. Coincidentally, as I started this blog, I opened a poll on Twitter. Of course, this data set isn’t significant enough to form large conclusions, but I was surprised by the results. I was expecting Nginx’s non-users to simply be satisfied with the well-established Apache HTTP server for IBM i. Instead, a decent number of people are serving apps directly, or simply didn’t know about Nginx. This made me wonder … is Nginx the biggest hidden gem?

It is often recommended that you host web applications and APIs behind an HTTP server such as Nginx or Apache. The HTTP server can handle many things to help make your application production-ready, for instance:

- Queuing connections when under heavy load

- Handling TLS. Note that HTTP Server for i integrates with Digital Certificate Manager (DCM), whereas Nginx does not.

- Filtering various HTTP headers, etc.

- Handling authentication

- Serving static content (often more efficiently than a high-level language application server)

- Handling multiple languages/applications behind a single virtual host

- Logging HTTP requests

Today I will focus on improving the performance of your web workloads. Nginx’s capabilities provide several mechanisms to speed things up. The best approach depends on the type of workload you are serving. I generally classify web content into one of four categories: static content; dynamic and occasionally changing content; dynamic and rapidly changing content; and real-time information. Nginx offers something for each of these types, regardless of which programming language you are using.

Category 1: Static Content

Static content still accounts for a large portion of web traffic. This includes not only HTML but also JavaScript files, images, etc. It’s best practice to store static content in a separate directory from programming logic, and just about every web framework provides a simple way to serve up this static content. For instance, Express.js has a one-liner static() function.

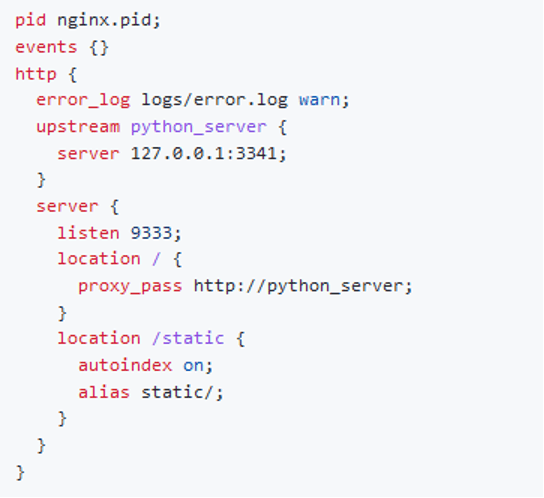

If your application code is hosted behind nginx, though, you can serve this content from nginx directly. This way, your web framework doesn’t need to deal with it. The nginx configuration in Figure 2 provides an example. In this case, nginx is listening on port 9333. It handles the static content directly, and proxies everything else to a backend Python server on port 3341.

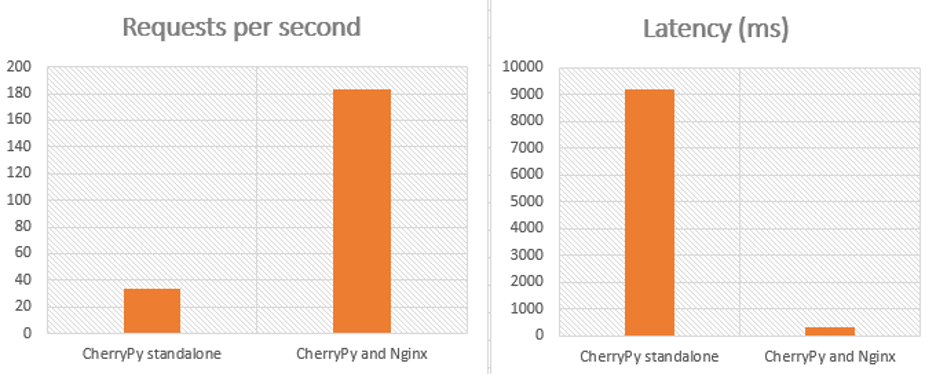
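In text form, a setup along these lines might look like the following. This is a minimal sketch rather than the exact configuration from the figure: the ports 9333 and 3341 come from the description above, while the `/static/` URL prefix and the `/www/myapp` directory are assumptions chosen for illustration.

```nginx
# Minimal sketch: nginx serves static files itself and proxies the rest.
# Assumptions (hypothetical): static files live in /www/myapp/static
# and are requested under the /static/ URL prefix.
events {}

http {
    # Maps file extensions to Content-Type headers. This file ships with
    # standard nginx installs; adjust the path if yours differs.
    include mime.types;

    server {
        listen 9333;

        # Requests under /static/ are read straight from disk.
        # With "root", the full URI is appended to the path, so
        # /static/logo.png is read from /www/myapp/static/logo.png.
        location /static/ {
            root /www/myapp;
        }

        # Everything else is handed to the backend Python server.
        location / {
            proxy_pass http://127.0.0.1:3341;
        }
    }
}
```

With this layout, the application framework never sees requests for images, stylesheets, or scripts. It only handles the requests that actually need program logic.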

Why would you want to do this? Simply put, the HTTP server can handle static content more efficiently than a high-level language framework. For demonstration purposes, I hosted a small web page consisting of static content and measured performance under heavy load: 10 concurrent connections (more intensive than 10 users) on a single-CPU IBM i system. I compared the performance of CherryPy, a multi-threaded web framework, running standalone against the performance of CherryPy behind nginx. The results, shown in Figure 3, were compelling. I saw about a 5x increase in the number of requests per second. The latency improvement was even more substantial!

Category 2: Dynamic, Occasionally Changing Content

People often classify web workloads as either static or dynamic, but it is important to further differentiate between the various types of dynamic workload. Let’s say, for instance, your API or web page provides weather forecast information. Surely, that data is “dynamic” as it changes over time. However, it isn’t constantly changing. Maybe there are some changes every hour or two.

In these cases, you can leverage the oldest trick in the web-performance book: content caching. After all, why ask your program to recalculate the data on every request for something that only changes periodically? Instead, you can simply cache this information for a time interval that makes sense (15 minutes? 30 minutes?). When your web site or API is under heavy load, this trick can help considerably.

Of course, caching itself has its own overhead, so a number of factors should be considered. For instance, how many requests do you handle per second? What percentage of those are cache “hits?” When in doubt, a bit of trial-and-error will go a long way.
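As a rough illustration of content caching in nginx, the sketch below caches successful backend responses for 15 minutes. The cache directory, the zone name `app_cache`, and the backend address are assumptions made for the example. The 15-minute interval is simply one of the values floated above.

```nginx
# Minimal sketch: cache backend responses for a fixed interval.
# Assumptions (hypothetical): cache directory /tmp/nginx_cache, a zone
# named app_cache, and a backend listening on 127.0.0.1:3341.
events {}

http {
    # Where cached responses are stored, plus 10 MB of shared memory
    # for the cache keys.
    proxy_cache_path /tmp/nginx_cache keys_zone=app_cache:10m;

    server {
        listen 9333;

        location / {
            proxy_cache app_cache;

            # Keep successful (200) responses for 15 minutes.
            proxy_cache_valid 200 15m;

            # Report HIT, MISS, EXPIRED, etc. in a response header so
            # the cache hit rate can be observed during testing.
            add_header X-Cache-Status $upstream_cache_status;

            proxy_pass http://127.0.0.1:3341;
        }
    }
}
```

The `X-Cache-Status` header is one way to answer the "what percentage are cache hits" question during trial-and-error. Keep in mind that caching headers sent by the backend itself (such as `Cache-Control`) take precedence over `proxy_cache_valid`.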

Category 3: Dynamic, Rapidly Changing Content

In other cases, the content might be changing more rapidly. For example, an API might expose current inventory levels or GPS coordinates of a moving delivery vehicle. It’s natural to think that caching is not a good fit in this scenario. If your application is under heavy load, though, caching can still have its benefits.

That’s where microcaching comes in. The term microcaching means exactly what one would guess: caching content for small intervals of time. After all, stale data might be fine if it’s only a few seconds from reality. A truck’s GPS coordinates, for example, would still be useful if they represent the truck’s position three seconds ago!

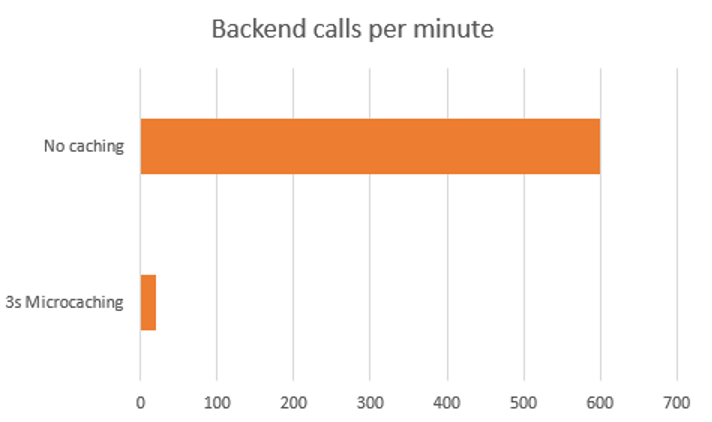

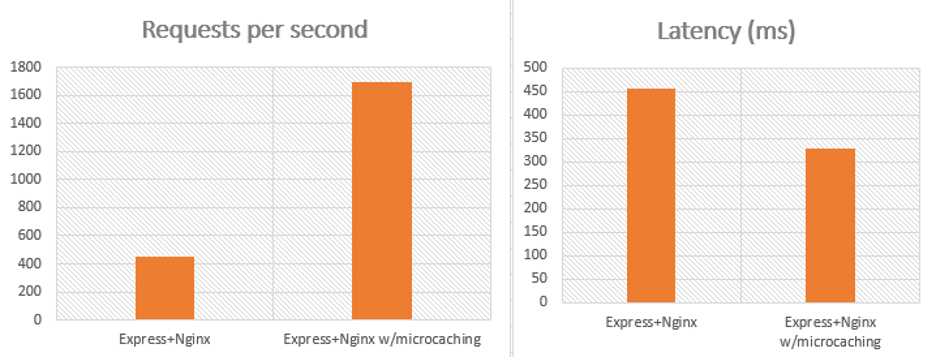

Microcaching is especially useful when you are servicing multiple requests per second. For instance, with a three-second caching tolerance, the back end need only make 20 calculations per minute (60 seconds divided by 3), regardless of how many API requests are handled. As an illustration, Figure 4 demonstrates how much impact this can make when handling 10 requests per second.

Of course, the more load your web server is under, the greater the advantage. To see the benefit, I created a small Db2 API with Express.js and benchmarked with 100 concurrent connections. Using three-second microcaching, we were able to handle about 5x the workload!
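A microcaching setup can be sketched with the same caching directives and a much shorter validity period. The example below is an assumption-laden sketch, not the configuration used in the benchmark: the cache directory, the zone name `microcache`, and the backend port are all hypothetical.

```nginx
# Minimal sketch: three-second microcaching in front of a backend API.
# Assumptions (hypothetical): cache directory /tmp/nginx_microcache, a
# zone named microcache, and a backend listening on 127.0.0.1:3341.
events {}

http {
    proxy_cache_path /tmp/nginx_microcache keys_zone=microcache:10m;

    server {
        listen 9333;

        location / {
            proxy_cache microcache;

            # Treat successful responses as fresh for three seconds.
            proxy_cache_valid 200 3s;

            # Let only one request at a time populate a new cache
            # entry; concurrent requests for it wait for the result.
            proxy_cache_lock on;

            # While an expired entry is being refreshed, keep serving
            # the stale copy instead of sending more backend requests.
            proxy_cache_use_stale updating;

            proxy_pass http://127.0.0.1:3341;
        }
    }
}
```

At 10 requests per second for the same URL, that is 600 requests per minute arriving at nginx, of which roughly 20 reach the backend. Note that `proxy_cache_use_stale updating` trades a little extra staleness for lower latency, so drop it if three seconds is a hard limit.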

Category 4: Real-Time Information

For some cases, the data needs to be as live as possible. Naturally, caching can’t help here. If you need to handle real-time requests on a large scale, you might need to spread the work across multiple jobs, or even multiple systems. No problem. Nginx is great at load balancing!

The concept of load balancing is simple. Several backend servers (Python, PHP, Java, etc.) can be handling requests, but the clients just connect to a central nginx server that distributes the work accordingly. In addition to potential performance benefits, it also adds a level of resiliency. If one of the backend servers crashes, the website or API can continue to function. For a visualization, see Figure 6.

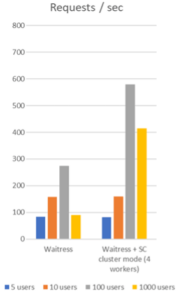
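In configuration terms, load balancing can be sketched with an `upstream` block. The example below assumes four hypothetical backend instances on local ports 3001 through 3004. The group name `backend_workers` and the port numbers are illustrative only.

```nginx
# Minimal sketch: distribute requests across several backend instances.
# Assumptions (hypothetical): four backend instances listening on local
# ports 3001-3004, grouped under the name backend_workers.
events {}

http {
    upstream backend_workers {
        # With no balancing method specified, nginx uses round-robin.
        server 127.0.0.1:3001;
        server 127.0.0.1:3002;
        server 127.0.0.1:3003;
        server 127.0.0.1:3004;
    }

    server {
        listen 9333;

        location / {
            # Clients connect to nginx on port 9333; nginx picks one
            # of the servers in the group for each request.
            proxy_pass http://backend_workers;
        }
    }
}
```

By default, if nginx cannot connect to one of the servers, it passes the request to the next one and temporarily stops sending traffic to the failed server. That behavior is what provides the resiliency described above.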

Naturally, I had some fun measuring the benefits here, too! In this case (see Figure 7) I measured a Python Waitress Db2 program running as a single instance, versus load-balanced across four workers (by way of Service Commander’s “cluster mode”).

Because of the possible scalability and durability benefits, load balancing is a common-sense step for leveraging more of your hardware resources to serve content.

What About Apache?

As you probably know, the Apache HTTP server has been available on IBM i for many years. In fact, the performance-boosting techniques I discussed today can be done with Apache, too! Even further, I’d expect performance to be comparable to nginx most of the time.

Why, then, might we want to use nginx? First off, it’s important to understand the key differences between Apache and nginx. They are two different implementations of an HTTP server, so they naturally have their variations. One can find plenty of opinions on the internet! On IBM i, there are some additional differences to note. Namely:

- Nginx is available as a PASE RPM. Apache is provided as part of the IBM HTTP Server for i (5770-DG1) product.

- For handling secure connections, Apache HTTP server integrates with Digital Certificate Manager (DCM). Nginx uses industry-standard configuration and OpenSSL.

- Nginx runs entirely in PASE. Parts of Apache run in ILE.

- Nginx can run inside chroot containers. Apache cannot.

So, which do you choose? The answer often boils down to things like available skill or personal preference. Personally, I’m an nginx fan because I find it easy to configure and manage, but someone else could reasonably claim the opposite. If building a production application, my recommendation is to try them both before you decide.

I will, however, make one strong assertion: Don’t run without an HTTP server in front of your application. The HTTP server can make your life a lot easier and can make your website or API faster and more secure. Don’t believe me? Read the docs for your favorite web framework. Odds are they call out the need for a frontend HTTP server, as the Express.js and Gunicorn documentation do.

Closing Thoughts on Nginx

As you can see, nginx is a pretty useful addition to the open-source ecosystem! If you’re interested in getting started, we have some basic information about Nginx on IBM i on our open-source documentation site, as well as several community portals documented on the IBM i open-source resources page. Certainly, your web workloads can be blazing fast!