Best Practices for AIX in a SAN Environment: Part 1

AIX supports a variety of SAN topologies, switches and storage servers from multiple vendors. This creates challenges, because interoperability among vendors is less than ideal. Therefore, one important step toward a reliable AIX system is to follow the best practices for the SAN environment. In this article, I explain the major best practices for AIX in a SAN environment, covering storage systems, FC connectivity and multipathing.

General Best Practices

One general rule of thumb is to avoid making changes if the performance is OK, because what helps in one situation may create an issue in another.

Having said that, it’s also important to check the storage vendor site and follow its recommendations. AIX can work with storage systems from different vendors. However, all of these combinations have unique requirements. From my experience, I’ve found it really helpful to read the host attachment or host connectivity guides from the storage vendor. These documents provide the best practices between the storage server and the most common OSes, including AIX. For instance, if you’re using an IBM Storwize system, you can read the best practice Redbook for IBM Storwize V7000.

Additionally, check the interoperability matrix from the storage vendor. Interoperability problems are notoriously difficult to isolate, and it can take a long time to obtain a fix from the vendor. For that reason, it’s a good idea to review this matrix before making big changes. For instance, the IBM System Storage Interoperation Center (SSIC) provides interoperability requirements for IBM storage. Also, be sure to avoid mixing FC switch vendors, except when running migrations.

SAN Topologies and Zoning

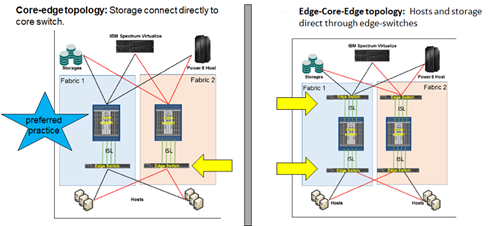

Each combination of AIX configuration, SAN equipment, topology and zoning needs its own recommendations. However, there are basic principles we can rely on. For example, there are different SAN topologies, such as edge-core-edge, core-to-edge or full-mesh, and each fits certain demands better. The best practice for AIX is to use the core-to-edge topology with director-class switches. In this scenario, you connect the VIOSes and high-demand AIX LPARs to the director switches. This configuration is a best practice because there are zero hops for AIX or VIOS hosts, which lowers I/O latency and avoids bottleneck and buffer starvation problems for AIX LPARs.

On the other hand, hosts with medium to low I/O requirements should use edge switches, to save the expensive director switch ports. The result is a cost-effective configuration that gives the best performance to the AIX hosts that need it.

Additionally, all SAN topologies should always be divided into two fabrics in a failover configuration. This means all storage servers and hosts should be connected to two different fabrics. This ensures redundancy by using multiple independent paths from the hosts to the storage system. Figure 1 summarizes the best practices for SAN topologies.

Another important consideration is the zoning configuration. Zoning keeps the servers isolated from each other and controls which servers can reach the LUNs in the storage server. SAN zoning is important to avoid boot problems and path issues on AIX. In this regard, there are two types of zoning: hard zoning and soft zoning. The best practice is to use soft zoning, that is, creating zones using only the worldwide port name (WWPN), with each zone containing a single host port and one or more target (storage system) ports. Keep in mind, NPIV configurations require zoning using the WWPN.
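Since WWPN-based zones are built from the host port names, it can help to have them in the colon-separated form that zoning configurations commonly use. The following is a minimal sketch, assuming the raw WWPN is the 16-hex-digit value that `lscfg -vl fcsX` reports in its Network Address field. The function name `format_wwpn` and the sample WWPN are made up for illustration.

```sh
# Format a raw 16-hex-digit WWPN into colon-separated pairs.
# format_wwpn is a hypothetical helper, not an AIX command.
format_wwpn() {
    printf '%s\n' "$1" | sed 's/../&:/g; s/:$//'
}

# On AIX, the raw value could be read with something like:
#   lscfg -vl fcs0 | grep 'Network Address'
# The WWPN below is a made-up example value.
format_wwpn 10000000C9123456
```

The result can then be pasted into the zone definition on the switch, whose syntax depends on the switch vendor.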

Multipathing Best Practices

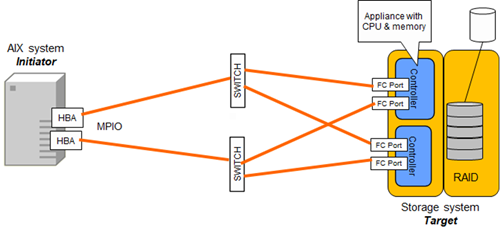

Multipathing provides load balancing and failover capabilities at the host and FC communication levels. The main idea is to provide redundancy all the way down to the storage server. Figure 2 illustrates the multipathing concept.

As you can see, multipathing provides better performance and high availability through the use of multiple paths. However, the best practice for AIX is to have between two and four paths, despite the fact that AIX can support up to 16 paths. Limiting the number of paths available to AIX can reduce the boot time and the cfgmgr run time, and improve error recovery, failover and failback operations. In addition, the suggestion for AIX with VIOSes is to use NPIV with dual VIOS, since it provides better performance with less CPU consumption. In contrast, vSCSI imposes higher latency and higher CPU consumption.
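To see whether a host stays within the two-to-four path guideline, the paths per device can be counted from `lspath` output. This is only a sketch: it assumes the default `lspath` column order (status, device, parent), and `check_path_count` is a hypothetical helper name. The sample input stands in for real output.

```sh
# Read lspath output on stdin (assumed columns: status, device, parent)
# and print every device whose path count falls outside the 2-4 range.
check_path_count() {
    awk '{ n[$2]++ }
         END { for (d in n) if (n[d] < 2 || n[d] > 4) print d, n[d] }' | sort
}

# On AIX: lspath | check_path_count
# Sample input for illustration only:
printf '%s\n' \
    'Enabled hdisk0 fscsi0' \
    'Enabled hdisk0 fscsi1' \
    'Enabled hdisk1 fscsi0' | check_path_count
```

Devices that are listed deserve a second look at the zoning and LUN mapping before any path is removed.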

MPIO software always deserves special consideration, because many path, boot and performance problems originate there. A recent trend is that non-IBM vendors are supporting the AIX native MPIO along with the ODM storage driver. However, the best recommendation is to check with your storage vendor. For example, when using IBM Storwize and SAN Volume Controller systems along with POWER9 processor-based systems, the best practice is to use the AIX native path control module (AIXPCM). The AIXPCM is integrated into the AIX base OS, thus removing the need for separate maintenance activities related to updating the PCM.

It’s also important to check these MPIO attributes for hdisk devices. You’ll find the right values for these parameters in your storage documentation:

- I/O algorithm (algorithm): Determines the way the I/O is distributed across paths

- Health check mode (hcheck_mode): Determines which paths are probed with the health check capability

- Health check interval (hcheck_interval): Determines how often the health check is performed

- Reservation policy (reserve_policy): Required for all MPIO devices

- Queue depth (queue_depth): Specifies the number of I/O operations that AIX can concurrently send to the hdisk device

Keep in mind, the names of these parameters may change depending on the MPIO software. For instance, the following example sets the predefined attributes to suitable values for operating with DS8K storage:

- # chdef -a queue_depth=32 -c disk -s fcp -t aixmpiods8k

- # chdef -a reserve_policy=no_reserve -c disk -s fcp -t aixmpiods8k

- # chdef -a algorithm=shortest_queue -c disk -s fcp -t aixmpiods8k
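To confirm what an existing disk actually reports, its attributes can be compared against the intended values. The sketch below assumes the `lsattr -El hdiskN` layout, where the attribute name is the first column and its current value the second. `check_attr` is a hypothetical helper, and the sample line is invented for illustration.

```sh
# Compare one attribute from lsattr -El output (read on stdin)
# against an expected value. check_attr is a hypothetical helper.
check_attr() {
    awk -v attr="$1" -v want="$2" '
        $1 == attr {
            found = 1
            if ($2 == want) print attr ": ok"
            else print attr ": " $2 " (expected " want ")"
        }
        END { if (!found) print attr ": not found" }'
}

# On AIX: lsattr -El hdisk0 | check_attr queue_depth 32
# Sample input for illustration only:
printf '%s\n' 'queue_depth 20 Queue DEPTH True' | check_attr queue_depth 32
```

The same call can be repeated for reserve_policy and algorithm, always using the values from the storage documentation rather than the ones shown here.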

In addition, check the following attributes for the Fibre Channel protocol driver (fscsiX) and the Fibre Channel adapter driver (fcsX). I’m adding the most commonly suggested values:

- FC error recovery (fc_err_recov): Fails I/O operations immediately, without waiting for a timeout, if a path goes away. Recommended value: fast_fail

- Dynamic Tracking (dyntrk): Allows dynamic SAN changes. Recommended value: yes

- Number of command elements (num_cmd_elems): Defines the number of I/O operations that can be in progress in the HBA at the same time. Increase it with caution since it can lead to boot problems or saturate the storage server. Minimum value: 1000

- Maximum transfer size (max_xfer_size): Defines the largest I/O block size that can pass over the HBA port. Recommended value: 0x200000

The following commands illustrate how to change the above-mentioned attributes:

- # chdev -l fcs0 -a max_xfer_size=0x100000 -a num_cmd_elems=4096

- # chdev -l fscsi0 -a fc_err_recov=fast_fail -a dyntrk=yes

For the FC HBA tuning attributes, follow these best practices: Complete a performance analysis on the adapters with fcstat before making changes. Do not increase values without an appropriate analysis, and increase them gradually, following the storage documentation.
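As a starting point for that analysis, the resource-shortage counters in `fcstat` output are commonly consulted: a growing "No Command Resource Count" is usually read as a sign that num_cmd_elems is too low, and a growing "No DMA Resource Count" as a hint to look at max_xfer_size. The sketch below only extracts those two counters. The helper name `fc_shortages` and the sample values are assumptions for illustration, and the exact layout of `fcstat` output may differ between AIX levels.

```sh
# Read fcstat output on stdin and print the two resource-shortage counters.
# fc_shortages is a hypothetical helper, not an AIX command.
fc_shortages() {
    awk -F': *' '
        /No DMA Resource Count/     { print "no_dma=" $2 }
        /No Command Resource Count/ { print "no_cmd=" $2 }'
}

# On AIX: fcstat fcs0 | fc_shortages
# Sample input for illustration only:
printf '%s\n' \
    '  No DMA Resource Count: 0' \
    '  No Adapter Elements Count: 0' \
    '  No Command Resource Count: 37' | fc_shortages
```

Comparing the counters at two points in time is more telling than a single reading, since they accumulate from the moment the adapter was configured.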

Final Storage Server Recommendations

I always try to avoid using different storage servers with the same FC adapter on AIX, since tuning parameters to improve performance for one storage server may have a negative impact on the other. In fact, I always try to use only one storage server per LPAR, because I’m going to need multipath I/O software for each of these storage servers and AIX can get confused with different MPIO software, especially when problems occur. Furthermore, for virtual environments, create dedicated VIOSes per storage vendor. That is, have two VIOSes dedicated to the DELL-EMC storage server and two others for the DS8K server. This makes troubleshooting and tuning easier. Finally, never use a single Fibre Channel adapter to talk to both disk and tape, because the structure of the I/O for a tape drive is very different from that of a disk and you can run into poor performance.

So far, I’ve discussed best practices for AIX from the SAN perspective. In my next article, I’ll discuss the best practices from the AIX perspective.