AI With IBM i: 11 watsonx Features You Never Knew Existed

The second article of the "AI With IBM i" series focuses on the deep menu of capabilities found within watsonx, from scientific prompt experimentation to custom LLM tuning

Editor’s note: This is the second article in the “AI With IBM i” series. Read the introductory article here.

If you’ve toyed around with watsonx, you already know it’s a powerhouse for deploying AI models, ensuring governance and creating data pipelines. But beneath the surface lies a treasure trove of lesser-known tools, the kind that makes seasoned users blink and say, “Wait … watsonx does that?”

Today, we’re diving into the secret menu: features that are powerful, playful and ready to level you up. By the end, you’ll have fresh ideas to excite your team, dazzle your boss or even convince the skeptics to finally give watsonx a spin.

Feature 1: Prompt Lab – Evaluate Like a Scientist

What It Is

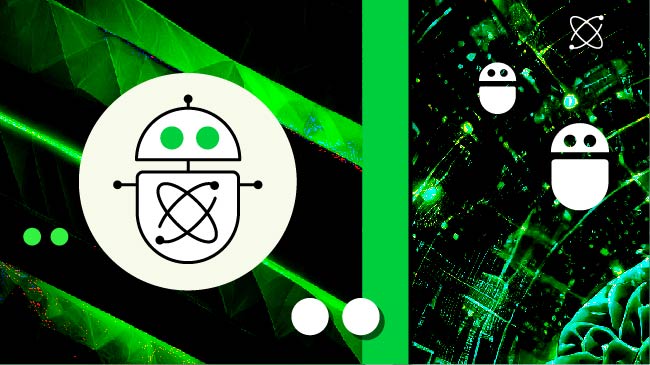

Prompt Lab in watsonx.ai isn’t just a playground; it’s your personal research and development space for testing and refining prompts. Enter any question and see results in real time. Prefer more control? Use the structured prompt template to guide the model with example input-output pairs that teach it tone, formatting or behavior.

Need more flexibility? You can even disable AI guardrails, allowing the model to generate responses that other platforms might block, which is useful for exploring sensitive topics in highly controlled enterprise environments.

Figure 1. Prompt Lab generation. (Source: Prompt a Foundation Model)

Why It Matters

Crafting the right prompt is part art, part science, and Prompt Lab gives you the tools to master both. By allowing structured examples, you can steer the model to fit your business voice, task format or compliance needs.

The option to turn off guardrails gives advanced users the freedom to:

- Explore edge cases

- Validate high-risk scenarios

- Test agent behavior under real-world inputs

This is especially valuable in industries like legal, security or research, where raw, unfiltered output can surface insights or failure points that sanitized models would otherwise hide.

Ever wish your prompts came with receipts? Prompt Lab keeps them, recording which model and which exact parameters produced each response. Finally, you can prove which version actually performs better. Cue the boss nodding.

Example

Say you want to generate product descriptions in a specific format. In Prompt Lab, you provide a few examples showing the layout and tone you want. You test different models and settings, and Prompt Lab tracks which one gave the best result, so you can repeat it with confidence.
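To make the structured-prompt idea concrete, here is a minimal Python sketch of the two pieces this example relies on: a few-shot prompt (instruction, example input-output pairs, then the new input) and a run log that ties every output to the model and exact parameters that produced it. The `Input:`/`Output:` layout, the model IDs and the `generate` stub are hypothetical placeholders, not the watsonx.ai SDK or Prompt Lab’s internal format.

```python
from dataclasses import dataclass, field

@dataclass
class RunRecord:
    """One logged generation: the model, its exact parameters, the prompt and the output."""
    model_id: str
    params: dict
    prompt: str
    output: str

def build_few_shot_prompt(instruction, examples, new_input):
    """Instruction, then example input/output pairs, then the new input awaiting an output."""
    lines = [instruction, ""]
    for example_in, example_out in examples:
        lines += [f"Input: {example_in}", f"Output: {example_out}", ""]
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)

def generate(model_id, prompt, params):
    """Hypothetical stand-in for a real model call; returns a placeholder string."""
    return f"<{model_id} output at temperature {params['temperature']}>"

@dataclass
class ExperimentLog:
    runs: list = field(default_factory=list)

    def run(self, model_id, prompt, params):
        output = generate(model_id, prompt, params)
        # Store a copy of the parameters so later edits can't rewrite history.
        self.runs.append(RunRecord(model_id, dict(params), prompt, output))
        return output

examples = [
    ("Stainless steel water bottle, 750 ml",
     "Name: Steel Bottle 750 | Tagline: Cold for hours. | Material: stainless steel"),
    ("Bamboo cutting board, large",
     "Name: Bamboo Board L | Tagline: Built for big prep days. | Material: bamboo"),
]
prompt = build_few_shot_prompt("Write a product description in the format shown.",
                               examples, "Wireless ergonomic mouse")

log = ExperimentLog()
for model_id in ["model-a", "model-b"]:        # hypothetical model IDs
    for temperature in [0.2, 0.7]:
        log.run(model_id, prompt, {"temperature": temperature, "max_new_tokens": 120})

for record in log.runs:
    print(record.model_id, record.params, record.output)
```

Once each run carries its model and parameters, comparing versions becomes a lookup rather than a memory exercise.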

Feature 2: Granite and Third‑Party Foundation Models

What It Is

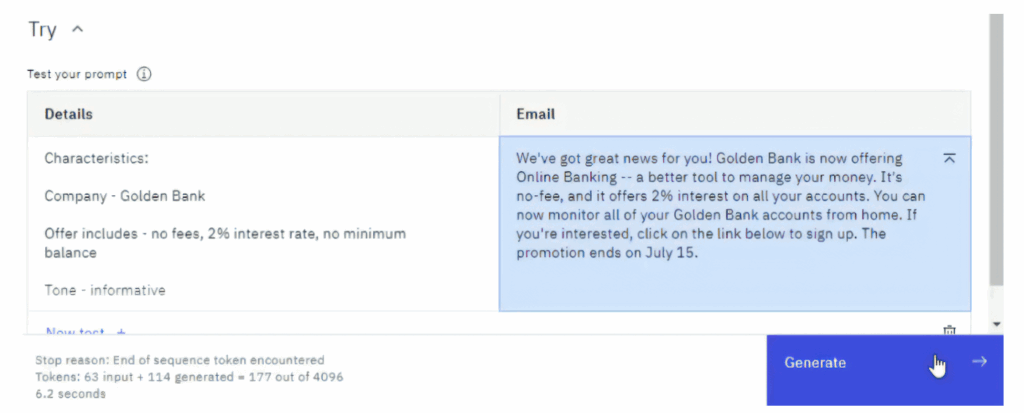

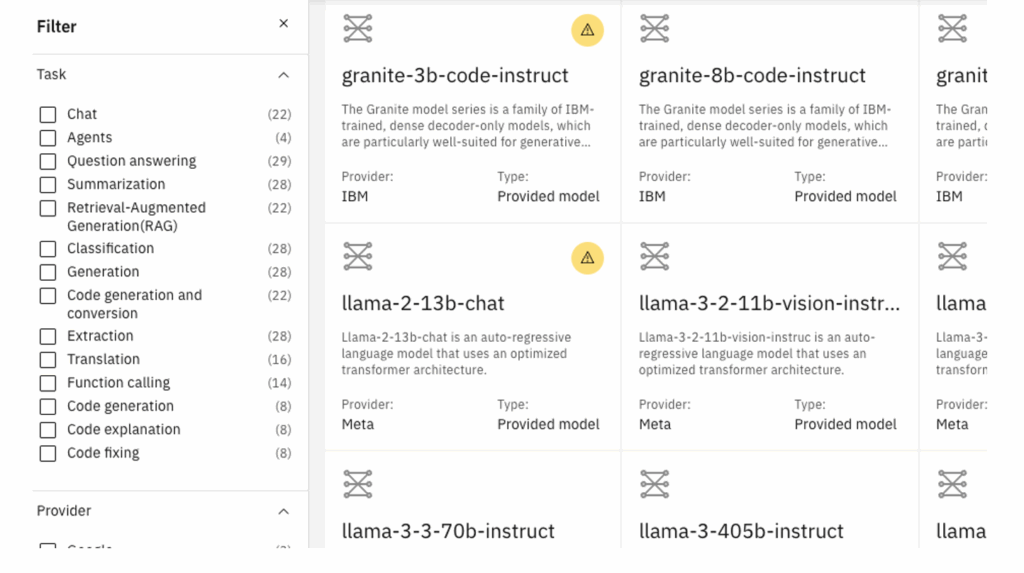

Under the hood, watsonx includes IBM’s Granite series of LLMs. Not only have they been trained on trillions of tokens, but that training also draws on enterprise-curated data sets spanning the internet, academic, code, legal and finance domains. Plus, thanks to partnerships with Meta, Mistral and Microsoft, you can seamlessly pick up Llama 3, Mistral, all-MiniLM or other third‑party models on watsonx.

Figure 2. watsonx model selection. (Source: watsonx.ai)

Why It Matters

Each foundation model has strengths. Need tight control over code generation? Use Granite Code. Want bleeding‑edge conversational nuance? Select Llama 3. And the best part? You can do all this in the same studio. Choosing a specific model also lets you run a smaller one that fits your use case: a coding model doesn’t need to be trained on the works of Shakespeare to write good code, so you save on memory and compute.

Example

Say you’re building a compliance chatbot. You spin up Granite (which has been explicitly trained on loads of enterprise-grade legal data), fine‑tune it with internal policy docs and deploy—all without juggling external APIs or licenses. If next week you need multilingual support, swap to Granite-multilingual.
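Because every model in the studio is addressed by an ID, “swap to Granite-multilingual” can be a one-line configuration change rather than an application rewrite. A minimal sketch, assuming a simple task-to-model mapping; the ID strings are hypothetical placeholders, so check the model catalog in your own watsonx environment for the exact IDs.

```python
# Hypothetical task-to-model routing table. The IDs are placeholders in the
# style of watsonx model IDs, not verified catalog entries.
MODEL_FOR_TASK = {
    "code": "ibm/granite-code-placeholder",
    "compliance_chat": "ibm/granite-instruct-placeholder",
    "multilingual_chat": "ibm/granite-multilingual-placeholder",
    "general_chat": "meta-llama/llama-3-placeholder",
}

def pick_model(task: str, fallback: str = "general_chat") -> str:
    """Return the model ID configured for a task, or the fallback task's model."""
    return MODEL_FOR_TASK.get(task, MODEL_FOR_TASK[fallback])

print(pick_model("compliance_chat"))

# Next week the compliance bot needs multilingual support: one config change,
# no application code touched.
MODEL_FOR_TASK["compliance_chat"] = MODEL_FOR_TASK["multilingual_chat"]
print(pick_model("compliance_chat"))
```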

Feature 3: Built-In Bias and Drift Detection for Governance

What It Is

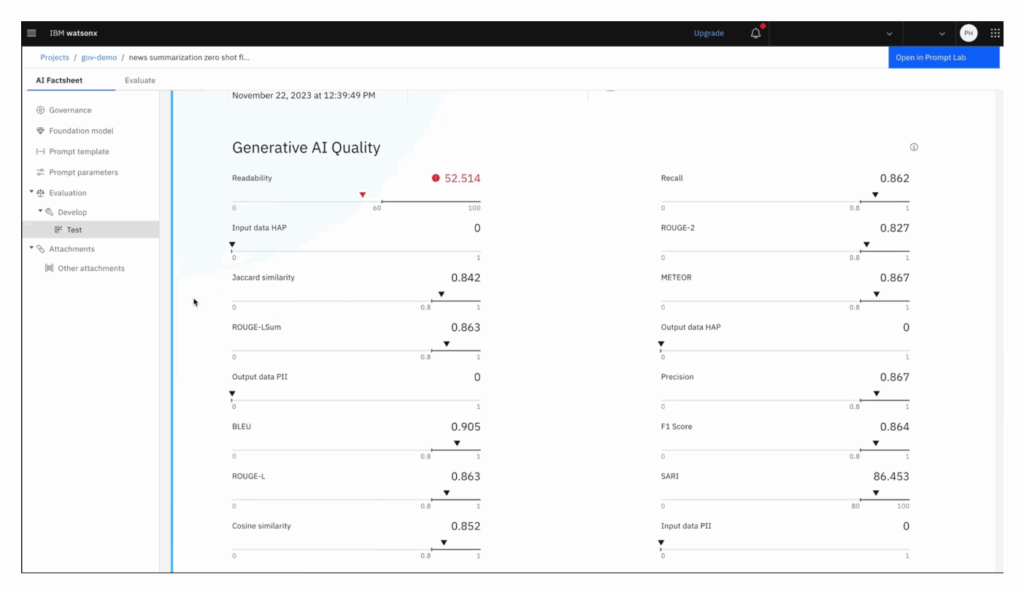

When you enable watsonx.governance, you’re not just checking a box for compliance. You’re getting tools that monitor your AI for bias, drift, explainability and data privacy violations—automatically.

These aren’t just reports that sit on a dashboard collecting dust. They actively flag models that need retraining, or ones that are treating certain user groups unfairly.

Figure 3. watsonx.governance evaluation. (Source: AI Model Governance)

Why It Matters

In 2025, responsible AI isn’t optional. With laws like the EU AI Act and New York City’s bias audit law (Local Law 144) coming into play, orgs need audit trails and bias transparency. watsonx.governance doesn’t just let you record fairness metrics; it lets you enforce thresholds, automate actions and log model decisions with full traceability.

Example

A bank’s credit risk model starts showing a drift in predictions for a particular ZIP code. Governance catches it, logs it and triggers an alert to the machine learning (ML) team, who discover the model is overreacting to inflation trends. You roll back, retrain and update, all within the same platform. It’s like having a compliance co-pilot sitting behind you, whispering, “Psst… that model’s starting to act shady.” And in this age? That voice might just save your job.
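What does “enforce thresholds” look like in practice? A minimal, hypothetical sketch: it computes a disparate impact ratio (the favorable-outcome rate for a monitored group divided by the rate for a reference group, a widely used fairness metric often checked against the four-fifths, or 0.8, rule) and a population stability index (PSI) as a simple drift score, then emits alerts when either crosses a threshold. PSI is a common industry drift measure swapped in here for illustration; it is not necessarily how watsonx.governance measures drift internally, and the thresholds, group names and data are assumptions.

```python
import math

def disparate_impact(outcomes, groups, monitored, reference):
    """Favorable-outcome rate of the monitored group divided by the reference group's rate.
    outcomes: 1 = favorable (e.g. credit approved), 0 = unfavorable."""
    def favorable_rate(group):
        selected = [o for o, g in zip(outcomes, groups) if g == group]
        return sum(selected) / len(selected)
    return favorable_rate(monitored) / favorable_rate(reference)

def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as per-bin fractions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)   # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total

def check_model(outcomes, groups, baseline_bins, current_bins,
                monitored="zip_A", reference="zip_B",
                di_threshold=0.8, psi_threshold=0.2):
    """Return alert messages for any metric that crosses its (assumed) threshold."""
    alerts = []
    di = disparate_impact(outcomes, groups, monitored, reference)
    if di < di_threshold:
        alerts.append(f"fairness: disparate impact {di:.2f} below {di_threshold}")
    psi = population_stability_index(baseline_bins, current_bins)
    if psi > psi_threshold:
        alerts.append(f"drift: PSI {psi:.2f} above {psi_threshold}")
    return alerts

# Toy data: approval decisions for two ZIP-code groups, plus binned score distributions.
outcomes = [1, 0, 0, 0, 1,   1, 1, 1, 0, 1]
groups = ["zip_A"] * 5 + ["zip_B"] * 5
alerts = check_model(outcomes, groups,
                     baseline_bins=[0.25, 0.25, 0.25, 0.25],
                     current_bins=[0.10, 0.20, 0.30, 0.40])
for alert in alerts:
    print("ALERT:", alert)   # a real setup would notify the ML team instead of printing
```

With this toy data, both checks fire: the monitored group’s approval rate is half the reference group’s, and the score distribution has shifted enough to push PSI past the 0.2 rule of thumb.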

Some notable organizations using watsonx.governance to ensure model compliance include the US Open and Infosys!

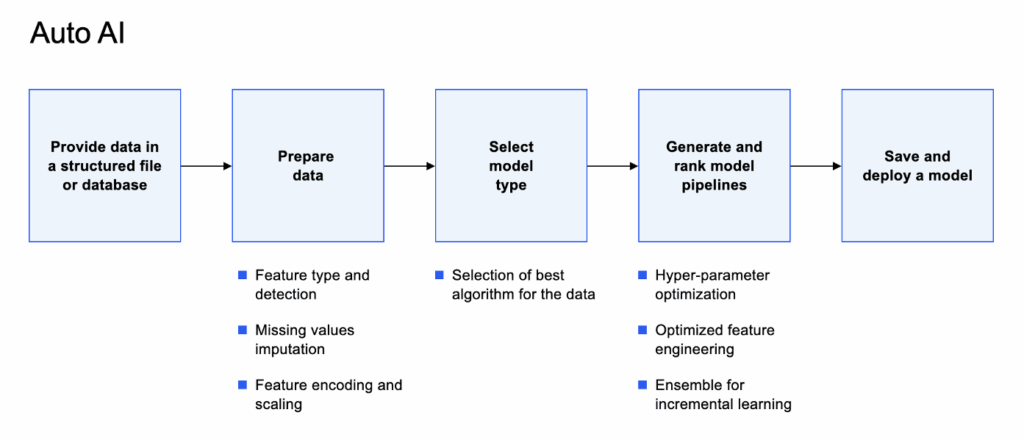

Feature 4: AutoAI Isn’t Just for Beginners; It’s a Secret Weapon

What It Is

At first glance, AutoAI looks like a nice training-wheels feature—pick a dataset, click go and watch the magic. But under the hood, it’s a full-blown pipeline builder that handles preprocessing, feature engineering, model selection and even hyperparameter tuning.

It doesn’t just pick a model; it builds, compares and explains dozens of them in minutes. Think of it as your own AutoML intern who never gets tired and documents everything.

Figure 4. watsonx AutoAI pipeline. (Source: AutoAI Overview)

Why It Matters

Experienced data scientists often skip AutoAI, thinking it’s too basic. Big mistake. AutoAI’s sweet spot is rapid prototyping and experimenting with different ways of preparing and transforming data. You can even export a pipeline as a Python notebook, and once the model is deployed, call it through its REST endpoint from the programming language of your choice.

Example

You’re asked to predict customer churn. Normally that’s hours of data wrangling, modeling and testing. With AutoAI, you upload the dataset and click a few config options, and in 15 minutes you’ve got:

- A leaderboard of top models

- An ROC curve and a table of model evaluation metrics

- An auto-generated notebook ready to be tweaked

It’s like discovering your blender has a “make gourmet sauce” button after years of only using it for smoothies. AutoAI isn’t just fast—it’s good. You’ll wonder why you’ve been grinding through pipeline code by hand all this time.
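At its core, a leaderboard is just candidate pipelines ranked by a metric on held-out data. A stdlib-only sketch, assuming you already have each candidate’s predicted churn probabilities for a small holdout set (the pipeline names and scores are toy values, not AutoAI output). It computes ROC AUC with the pairwise (Mann-Whitney) formulation and sorts the candidates; ROC AUC is assumed as the ranking metric here.

```python
def roc_auc(labels, scores):
    """ROC AUC as the probability that a random positive outscores a random negative
    (ties count as half). O(P*N) pairwise comparison, fine for a small holdout set."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def leaderboard(labels, candidates):
    """Rank candidate pipelines (name -> holdout scores) by ROC AUC, best first."""
    rows = [(name, roc_auc(labels, scores)) for name, scores in candidates.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)

# Toy holdout set: 1 = churned, 0 = stayed.
y = [1, 0, 1, 1, 0, 0, 1, 0]
candidates = {
    "P1 logistic regression": [0.9, 0.2, 0.7, 0.6, 0.65, 0.1, 0.8, 0.3],
    "P2 gradient boosting":   [0.8, 0.3, 0.9, 0.4, 0.5, 0.2, 0.7, 0.6],
    "P3 random forest":       [0.6, 0.5, 0.5, 0.7, 0.4, 0.8, 0.9, 0.2],
}
board = leaderboard(y, candidates)
for rank, (name, auc) in enumerate(board, start=1):
    print(f"{rank}. {name}: AUC={auc:.3f}")
```

AutoAI also handles the parts this sketch skips, such as preprocessing, feature engineering and hyperparameter tuning, before anything reaches the leaderboard.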

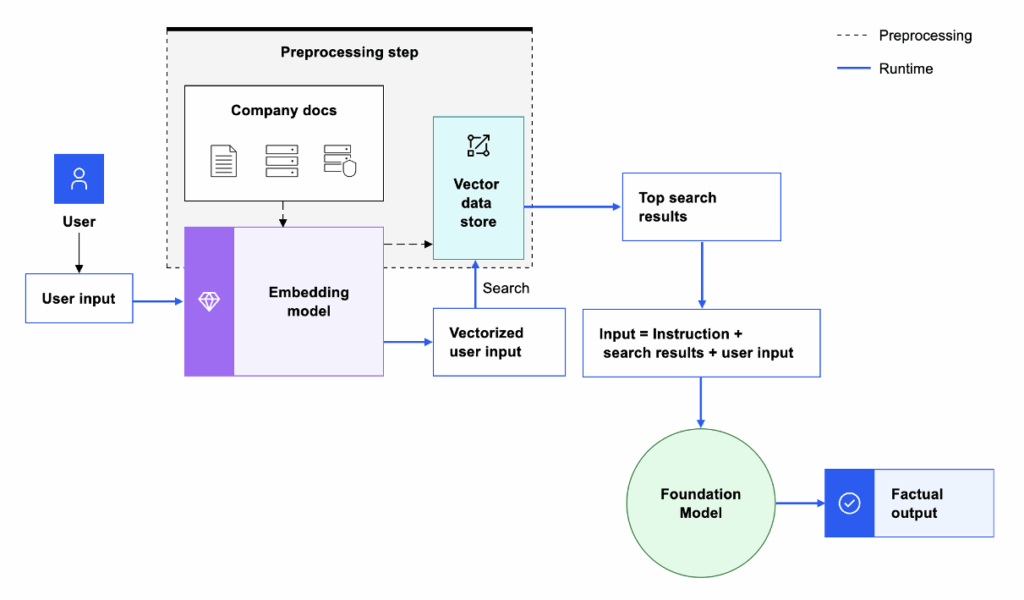

Feature 5: Vector Search + RAG – Build Smart, Searchable Apps Fast

What It Is

Let’s talk about what powers GenAI apps that actually feel smart: RAG, or Retrieval-Augmented Generation. In watsonx, you can build RAG pipelines by connecting:

- A vectorized document corpus (your PDFs, docs, notes)

- A vector search engine (built-in)

- A Granite or third-party LLM (like Llama 3)

Why It Matters

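LLMs often hallucinate on domain-specific questions because they have never seen your content. With RAG, you give them a brain filled with your content: users ask questions, the system retrieves the most relevant passages from your documents, and the LLM answers from that real material rather than from its training data alone.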

watsonx lets you vectorize content, store embeddings and integrate it into a custom prompt flow, all with minimal code and zero infrastructure fiddling.

Figure 5. RAG Architecture. (Source: Retrieval-augmented generation (RAG) pattern)

Example

Your HR team wants a chatbot that answers questions about benefits. Instead of training a whole model, you vectorize the benefits PDFs, load them into the index and connect it to Granite-Instruct. Done. Now it gives grounded, accurate answers 24/7.

This is the kind of “aha” moment that makes non-tech execs lean in. “Wait, you built that in a day?” Yes. Yes, you did.
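The retrieve-then-generate loop is easy to see in miniature. A stdlib-only sketch, assuming toy bag-of-words vectors in place of a real embedding model and an in-memory list in place of the built-in vector store: it ranks benefit snippets by cosine similarity to the question and packs the top hits into a grounded prompt for the LLM. The function names and sample policy text are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# "Index" the corpus: store each chunk alongside its vector.
chunks = [
    "Dental coverage includes two cleanings per year at no cost.",
    "Employees may enroll in the 401(k) plan after 30 days of service.",
    "Vision benefits cover one eye exam and glasses every 12 months.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(question, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_grounded_prompt(question, k=2):
    """Pack retrieved chunks into a prompt that tells the model to stay within them."""
    context = "\n".join(f"- {c}" for c in retrieve(question, k))
    return ("Answer using only the context below. If the answer is not there, say you don't know.\n"
            f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")

print(build_grounded_prompt("How many dental cleanings per year are covered?"))
```

The resulting prompt is what gets sent to the LLM; swapping the toy `embed` for a real embedding model and the list for a vector index changes the quality, not the shape, of the pipeline.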

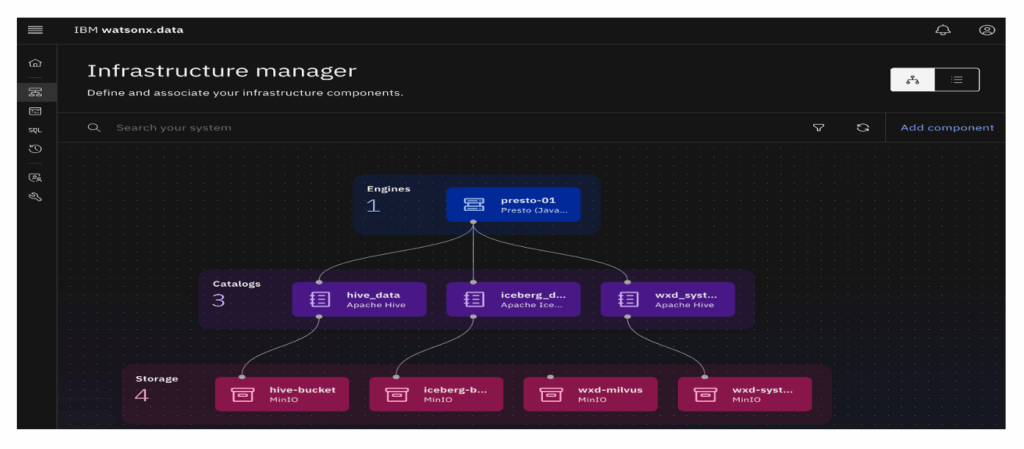

Feature 6: watsonx.data – Lakehouse, but Actually Useful

What It Is

Most enterprise data lakes are either glorified data swamps or locked into a single vendor’s stack. watsonx.data flips the script with an open lakehouse architecture that speaks fluent enterprise: Iceberg tables, multi-engine querying (Db2, Presto, Spark) and elastic compute that scales like the cloud-native pro it is.

You want to run pandas on Parquet? Cool. Need to hit object storage, relational tables and Hadoop in the same query? watsonx.data says “go for it.”

Figure 6. watsonx.data Infrastructure Manager. (Source: watsonx.data)

Why It Matters

The old way: dump data into one place, copy it a dozen times for every team, spend half your budget on storage. The new way: query where your data lives, keep governance tight and scale compute only when you need it.

It’s also built with data lineage, access control and compliance in mind, so legal, finance and AI teams can stop fighting over who owns what.

Example

Say your risk analytics team needs five years of customer transaction logs, which are half in Db2, half in an S3 bucket. Instead of ETL purgatory, they just run a federated query in watsonx.data, join everything on the fly and feed the result straight into their modeling pipeline.

Suddenly, your data lake actually delivers insight, not just storage bills.
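To picture what “join everything on the fly” means, here is a small analogy using Python’s built-in `sqlite3`: two separately attached databases stand in for a Db2 catalog and an object-storage catalog, and one SQL statement reaches across both using catalog-qualified names. This only illustrates the shape of a federated query; in watsonx.data the federation is done by engines such as Presto against real catalogs, and every catalog and table name here is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Two attached in-memory databases stand in for two separate catalogs.
conn.execute("ATTACH DATABASE ':memory:' AS db2_cat")
conn.execute("ATTACH DATABASE ':memory:' AS lake_cat")

conn.execute("CREATE TABLE db2_cat.transactions (customer_id INTEGER, year INTEGER, amount REAL)")
conn.execute("CREATE TABLE lake_cat.transactions (customer_id INTEGER, year INTEGER, amount REAL)")
conn.execute("CREATE TABLE db2_cat.customers (customer_id INTEGER, segment TEXT)")

conn.executemany("INSERT INTO db2_cat.transactions VALUES (?, ?, ?)",
                 [(1, 2021, 120.0), (2, 2022, 80.0)])
conn.executemany("INSERT INTO lake_cat.transactions VALUES (?, ?, ?)",
                 [(1, 2024, 200.0), (2, 2025, 40.0)])
conn.executemany("INSERT INTO db2_cat.customers VALUES (?, ?)",
                 [(1, "retail"), (2, "commercial")])

# One query spans both "catalogs": union the history, then join to customer data.
rows = conn.execute("""
    SELECT c.segment, SUM(t.amount) AS total
    FROM (
        SELECT customer_id, amount FROM db2_cat.transactions
        UNION ALL
        SELECT customer_id, amount FROM lake_cat.transactions
    ) AS t
    JOIN db2_cat.customers AS c ON c.customer_id = t.customer_id
    GROUP BY c.segment
    ORDER BY c.segment
""").fetchall()
print(rows)
```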

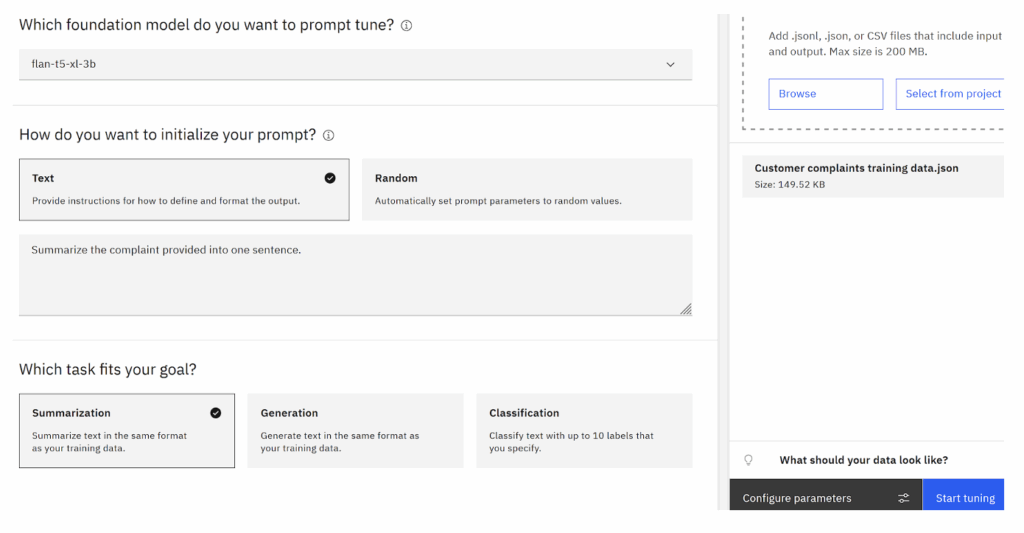

Feature 7: Custom LLM Tuning With Your Data, No GPUs Required

What It Is

Fine-tuning a large language model used to mean spinning up GPU clusters and praying your bill wouldn’t bankrupt you. With watsonx.ai, IBM has streamlined the entire process into a managed experience, so you can fine-tune Granite models (or others) right inside your project workspace.

And here’s the kicker: it’s optimized to work efficiently even with small data sets and enterprise-focused tasks—think classification, summarization or instruction following.

Figure 7. Fine-tuning a foundation model. (Source: Tune a Foundation Model)

Why It Matters

Enterprises have highly specific language: legal, financial, technical jargon. Off-the-shelf LLMs often won’t cut it. Fine-tuning lets you inject your own tone, terminology and workflows into a model. watsonx.ai lets you do this without infrastructure headaches or complex ML pipelines.

Example

Your customer support team has a goldmine of chat transcripts. You feed them into a fine-tuning run with a Granite model. The result? A helpdesk assistant that sounds just like your top-tier reps and knows your business inside-out.

This is when IT managers go from skeptical to impressed. “You fine-tuned a support assistant over lunch?” Yep. And you didn’t even touch a GPU console.
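Most of the real work in a tuning run is shaping the training data. A minimal sketch, assuming a hypothetical transcript structure and a JSONL training file in which each line holds an `input` and an `output` field; confirm the exact field names against the current watsonx.ai tuning documentation before uploading anything.

```python
import json

# Hypothetical transcript shape: a list of (speaker, text) turns per conversation.
transcripts = [
    [("customer", "My invoice shows a duplicate charge."),
     ("agent", "Sorry about that! I've flagged the duplicate and started a refund.")],
    [("customer", "How do I reset my portal password?"),
     ("agent", "Click 'Forgot password' on the login page and follow the emailed link."),
     ("customer", "Thanks, that worked."),
     ("agent", "Glad to hear it! Anything else I can help with?")],
]

def to_training_records(transcripts):
    """Pair every customer turn with the agent reply that immediately follows it."""
    records = []
    for convo in transcripts:
        for (speaker, text), (next_speaker, next_text) in zip(convo, convo[1:]):
            if speaker == "customer" and next_speaker == "agent":
                records.append({"input": text, "output": next_text})
    return records

records = to_training_records(transcripts)
jsonl = "\n".join(json.dumps(record) for record in records)   # one JSON object per line
print(jsonl)
```

Pairing turns one at a time drops earlier context; for long conversations you might instead concatenate the preceding turns into `input` so the model learns to answer in context.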

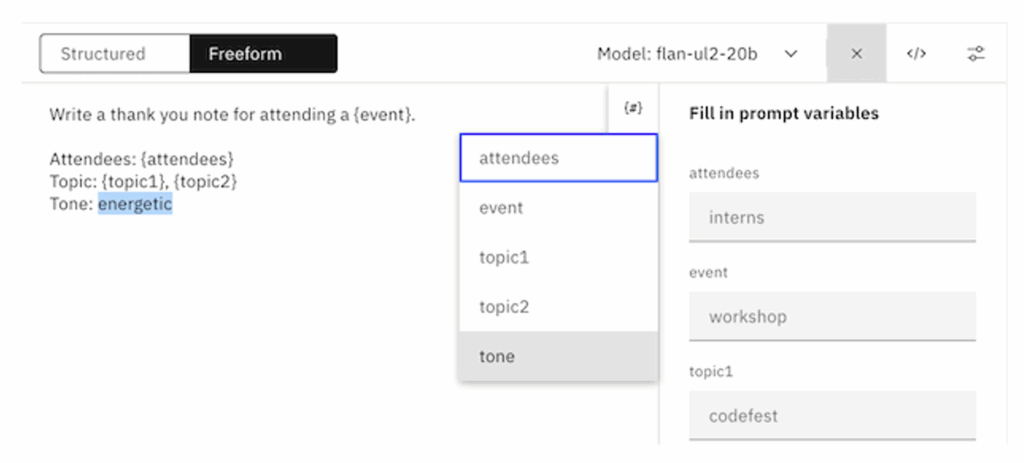

Feature 8: Prompt Templates That Actually Scale (and Save Time)

What It Is

watsonx includes a prompt template builder that lets you define reusable prompt assets with dynamic variables and structured examples. You can create both single-turn and chat-style prompts, inject runtime values and test them directly, making it easier to scale prompt engineering in production environments.

You can even version them, assign owners, and plug them directly into apps or pipelines.

Figure 8. Using Prompt Lab to create a Prompt Template. (Source: Building Reusable Prompts)

Why It Matters

Manually tweaking prompts for every use case is a recipe for madness. Prompt templates let you standardize your best prompts across departments, whether it’s summarizing emails, rewriting legal clauses or generating code snippets.

Better still, templates can be tested, evaluated and deployed with or without guardrails.

Example

Your legal team crafts a bulletproof NDA clause rewriter. You templatize it, lock down the structure and publish it for the sales team to use in their CRM workflows. Now, sales gets speed, and legal keeps consistency.

It’s the difference between one-off genius and scalable excellence. Your best prompt doesn’t belong in a sticky note—it belongs in a versioned, audited, shareable template that everyone can use (without breaking it).
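Here is a minimal sketch of what a reusable, versioned template with runtime variables boils down to, assuming `{placeholder}` syntax and hypothetical metadata fields (`name`, `version`, `owner`). It is not the watsonx prompt template API, but it shows why templates resist breakage: the structure is fixed, and rendering fails loudly if a caller forgets a variable.

```python
from dataclasses import dataclass
from string import Formatter

@dataclass(frozen=True)
class PromptTemplate:
    """A reusable, versioned prompt with {placeholder} variables (hypothetical structure)."""
    name: str
    version: str
    owner: str
    text: str

    def variables(self):
        """Names of all {placeholders} in the template text."""
        return {field for _, field, _, _ in Formatter().parse(self.text) if field}

    def render(self, **values):
        """Fill in the variables, refusing to render if any are missing."""
        missing = self.variables() - set(values)
        if missing:
            raise ValueError(f"missing template variables: {sorted(missing)}")
        return self.text.format(**values)

nda_rewriter = PromptTemplate(
    name="nda-clause-rewriter",
    version="1.2.0",
    owner="legal-team",
    text=("Rewrite the following NDA clause in plain English for {audience}. "
          "Keep all obligations intact.\n\nClause:\n{clause}\n\nRewritten clause:"),
)

print(nda_rewriter.render(
    audience="a sales prospect",
    clause="The Receiving Party shall hold Confidential Information in strict confidence.",
))
```

Freezing the dataclass means a published version can’t be edited in place; a change means a new `version`, which is what makes the template auditable.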

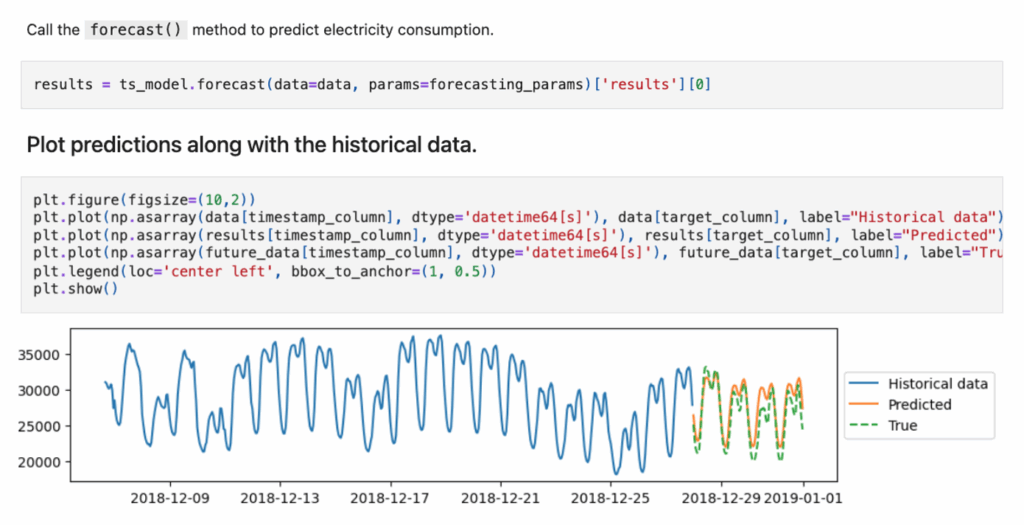

Feature 9: Forecasting With Granite TTM – Time Series Superpowers, No PhD Required

What It Is

Forecasting is notoriously tricky. It usually requires domain knowledge, data wrangling, model selection and tuning. But with watsonx and IBM’s Granite TTM (Tiny Time Mixer) models, accurate forecasts are finally within reach for teams without a data science army.

Why It Matters

What makes Granite TTM different? It’s IBM’s foundation model for time series forecasting, pretrained on vast amounts of multivariate data, so it already recognizes patterns like seasonality, trends, sudden spikes and cyclical behavior across industries.

You don’t have to train it, and in many cases you don’t need to fine-tune it either. Just point it at your data (think: sales, energy usage, supply chain signals), and it will generate a forward-looking forecast, often outperforming traditional models even in zero-shot mode.

Figure 9. Forecasting energy data using Granite TTM. (Source: Using Time Series Foundation Models)

Example

Say your operations team wants a 12-week demand forecast. You load your historical sales data into watsonx, select a Granite TTM model (like granite-ttm-r2) and specify how many future steps you want. The model handles the rest, detecting seasonality, smoothing anomalies and returning confidence-banded predictions you can immediately use for planning or dashboards.

This isn’t just forecasting—it’s forecasting with a foundation model brain. No training. No manual tuning. Just results. Accurate, interpretable, enterprise-grade forecasts in minutes.
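“Often outperforming traditional models” is worth checking on your own data before you trust it. A minimal backtesting sketch, assuming toy weekly sales with a four-week cycle: it holds out the last 12 weeks, scores a naive and a seasonal-naive baseline with mean absolute error, and leaves a slot for a TTM forecast. Granite TTM itself is not called here; `my_ttm_forecast` is a hypothetical placeholder for however you invoke it.

```python
def seasonal_naive(history, horizon, season_length):
    """Repeat the last full season forward: a standard baseline for seasonal data."""
    last_season = history[-season_length:]
    return [last_season[i % season_length] for i in range(horizon)]

def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def backtest(series, horizon, forecast_fn):
    """Hold out the last `horizon` points, forecast them from the rest, and score with MAE."""
    history, actual = series[:-horizon], series[-horizon:]
    return mean_absolute_error(actual, forecast_fn(history, horizon))

# Toy weekly demand: a 4-week cycle plus a gentle upward trend.
weekly_sales = [100 + 10 * (week % 4) + week for week in range(40)]

naive_mae = backtest(weekly_sales, 12, lambda h, n: [h[-1]] * n)
seasonal_mae = backtest(weekly_sales, 12, lambda h, n: seasonal_naive(h, n, season_length=4))
print(f"naive MAE={naive_mae:.2f}  seasonal-naive MAE={seasonal_mae:.2f}")

# A TTM forecast function (hypothetical here) would be scored the same way:
# ttm_mae = backtest(weekly_sales, 12, my_ttm_forecast)
```

If the foundation model can’t beat a seasonal-naive baseline on your backtest, that is a signal to look at fine-tuning or at the data itself.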

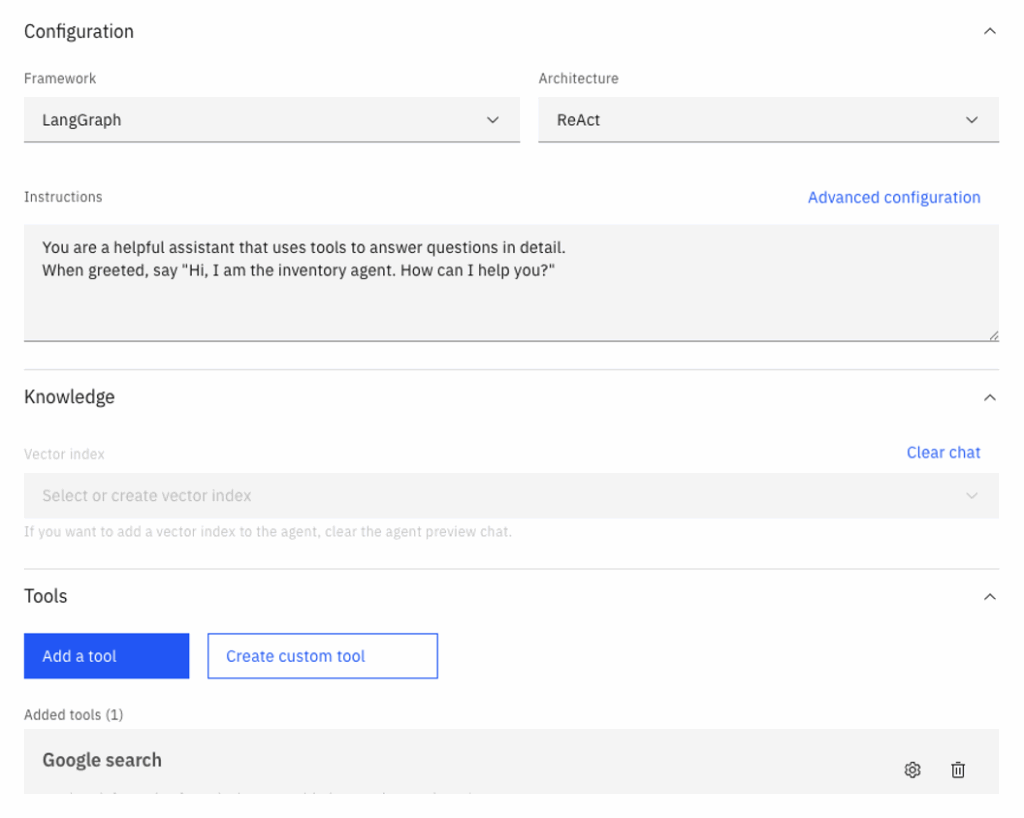

Feature 10: Agentic AI – Your Data’s New Favorite Coworker

What It Is

Think Granite, but instead of just answering questions, it acts on them. Agentic AI in watsonx connects language models with tools, data sources and APIs, so they don’t just tell you what to do; they actually do it. These agents really start to shine once you connect them to your own custom APIs by using the “Create custom tool” button.

Agents can retrieve data, call functions, kick off workflows, summarize results and even make decisions based on your rules. It’s like giving your LLM a to-do list and watching it check off the boxes, without human babysitting.

Figure 10. Building a custom agent to manage inventory on watsonx. (Source: watsonx.ai)

Why It Matters

Your business processes aren’t just conversations; they’re actions: generate a report, update a ticket, fetch the latest inventory snapshot. Regular LLMs stop at text. Agentic AI keeps going. With watsonx, you can build these agents directly into your enterprise apps, with governance, logging and safe execution built in.

Example

Your supply chain team wants to know if any parts are at risk due to port delays. An agent hits your logistics API—which you’ve defined as a custom tool—cross-references it with supplier locations, pulls the top five risk areas and writes a summary directly into your ops dashboard. By the time someone asks the question, the answer’s already waiting.

No more playing middleware between your data and your insights.
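The core agent pattern is a loop: the model proposes a tool call, your code executes it, and the result goes back into the conversation until the model can answer. A minimal sketch with a stubbed “model” and hypothetical logistics tools returning fake data; it shows the dispatch mechanics only, not the watsonx agent tooling or the format the “Create custom tool” button produces.

```python
import json

# Hypothetical custom tools with fake data; in practice these would wrap your own APIs.
def get_port_delays(region: str) -> dict:
    fake_delays_in_days = {"asia": {"Shanghai": 6, "Busan": 2}, "europe": {"Rotterdam": 1}}
    return fake_delays_in_days.get(region, {})

def get_suppliers_by_port(port: str) -> list:
    fake_suppliers = {"Shanghai": ["Acme Bearings", "Example Gears"], "Busan": ["Sample Circuits"]}
    return fake_suppliers.get(port, [])

TOOLS = {"get_port_delays": get_port_delays, "get_suppliers_by_port": get_suppliers_by_port}

def stub_model(history):
    """Stand-in for an LLM: picks the next step based on the tool results seen so far."""
    results = [m for m in history if m["role"] == "tool"]
    if not results:
        return {"tool": "get_port_delays", "args": {"region": "asia"}}
    if len(results) == 1:
        delays = json.loads(results[0]["content"])
        worst_port = max(delays, key=delays.get)
        return {"tool": "get_suppliers_by_port", "args": {"port": worst_port}}
    suppliers = json.loads(results[1]["content"])
    return {"answer": f"At-risk suppliers: {', '.join(suppliers)}"}

def run_agent(question, max_steps=5):
    """Alternate model decisions and tool executions until an answer or the step limit."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = stub_model(history)
        if "answer" in step:
            return step["answer"]
        result = TOOLS[step["tool"]](**step["args"])   # execute the requested tool
        history.append({"role": "tool", "name": step["tool"], "content": json.dumps(result)})
    return "Stopped: step limit reached."

print(run_agent("Are any parts at risk from port delays?"))
```

The `max_steps` cap is a simple safety valve: an agent that keeps requesting tools without converging stops instead of looping forever.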

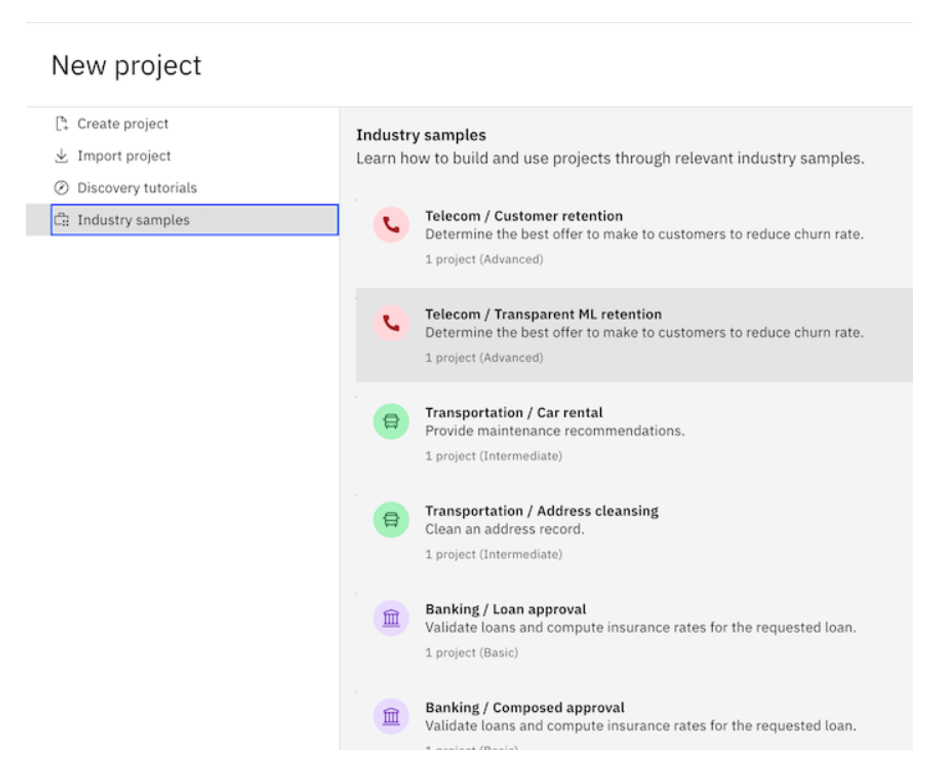

Feature 11: watsonx Orchestrate – Your AI Assistant’s Assistant

What It Is

Think of Orchestrate as the AI project manager that connects all the dots—tools, agents, systems, and workflows—without ever touching a command line. Built for business users (yes, even the ones who still say “AI makes me nervous”), watsonx Orchestrate lets you assemble full-on AI assistants using a clean, visual interface. You drag. You drop. You click “Run.” And magic happens.

Each assistant is powered by reusable “skills”—prebuilt or custom functions that do everything from sending emails and updating CRM entries to calling your watsonx.ai agents behind the scenes. It’s multi-agent orchestration without the DevOps headache. Need your assistant to summarize a contract, notify Legal and open a ServiceNow ticket? That’s a flow. You just built one.

Figure 11. Creating a new project on watsonx Orchestrate. (Source: Overview of IBM watsonx Orchestrate)

Why It Matters

Agentic AI is powerful, but what happens when your team needs five agents, four tools, three approvals and one secure audit trail? Orchestrate stitches it all together. It’s where AI meets real enterprise workflow: think HR onboarding, IT support triage, procurement approvals—automated, explainable and traceable.

This isn’t just “chat with AI.” It’s “deploy AI that gets work done.”

Example

Your HR team wants to onboard a new employee. An Orchestrate assistant collects manager inputs, schedules a welcome call, generates policy summaries using a Granite model and auto-populates fields in your Workday instance. Oh, and it pings IT to ship a laptop, all before lunch.

No dev sprint. No copy-paste chaos. Just … onboarding done.

Final Thoughts

watsonx is more than just a toolbox—it’s a cohesive AI platform designed for builders, thinkers and tinkerers. Beneath the headline features lies a world of powerful tools that reward curiosity and empower organizations to build real, production-ready AI without the typical chaos.

If you’ve been using watsonx just for notebooks or basic prompts, it’s time to explore what else is under the hood. Because once you start unlocking these lesser-known features, watsonx stops feeling like just another platform—and starts feeling like an unfair advantage.

Ready to Explore?

Spin up a project, open Prompt Lab, or publish your first fine-tuned model to your deployment space. The best way to discover these features is to play with them—and maybe, just maybe, uncover a few hidden gems of your own.