CICS Tools Identify Performance Issues

This article focuses on CICS performance. On the mainframe, CICS is known as CICS Transaction Server (TS); on other platforms the product is named TXSeries and, for special functions, CICS Transaction Gateway. All products are developed and supported by CICS development, and are designed to interact with each other via facilities such as CICS Intercommunication. While techniques, methodology, syntax, parameters and implementation specifics may vary, most functions, concepts and usage apply to all processing platforms. Thus, the term “CICS” in this article applies to both CICS TS and TXSeries unless otherwise stated.

It’s easy to determine if a performance issue exists in an IT system: Press the Enter/Send key, click the mouse on a selection or tap an icon and wait. And wait some more. Maybe even more. Or watch a video start and stop, blank out and return, instead of playing smoothly. Or submit a report job and wait a couple of days for it to appear on your desk or print out on your printer. Performance problems are very easy to detect. Determining what performance degradations exist and why they occur is another matter.

Identifying performance inefficiencies and their causes can be confounding, partly because systems are complex, many components can fail, detection facilities may not exist or may be turned off, and components depend on one another. For example, inadequate real storage can drive up processor utilization by causing excessive paging, leading to the conclusion that there’s insufficient processing capacity. Yet the real solution lies in adding real storage, which reduces CPU utilization and OS waits (due to time spent reading pages into real storage).

So determining the causes of performance degradation is often convoluted and obscure. Getting to the bottom of a performance deficiency requires data describing various performance metrics and the ability to monitor systems in real time. Further compounding matters, in most cases CICS is competing with other processing, so data collection needs to occur at both the system and CICS levels. In the past, each product (e.g., CICS, MVS, IMS) recorded its own performance data, but over time SMF evolved to gather all this information and store it in files or through the MVS System Logger, standardizing the process.

Because performance data is now recorded in a documented and architected way, tools and internal mechanisms can exploit the data to produce tabular reports, graphical representations and other useful depictions. Analysis techniques like trending, interval analysis, queuing theory, interval plotting and other data-massaging techniques can turn raw data into a treasure-trove of guidance on how to optimize processing resources. Products from IBM and other vendors provide this capability, and additionally provide extensive performance monitoring capabilities that allow an analyst to keep a finger on a system’s pulse. CICS has produced a wide variety of tools, functions and components that are consistent between CICS TS and TXSeries.

Mainframe Recording and Storing Performance Information

SMF isn’t a component of CICS; it’s a component of z/OS. CICS uses SMF to write performance, audit and accounting records to SMF files named SYS1.MAN1 and SYS1.MAN2 or, alternatively, to MVS Logstreams. In most cases, SMF will already be set up and operational, but should that not be the case, here in a nutshell are the steps required to enable and activate it, along with a few facts about the records it produces:

- Review installation materials and the MVS System Management Facilities (SMF) manual

- Set up SMF datasets or MVS Logstreams

- Create SYS1.PARMLIB member SMFPRMxx, using a test suffix, with LSNAME parameters specifying the record types of interest

- Determine dumping options and procedures

- Tailor SMF to meet your system requirements

- Test SMF via IPL and console commands

- Review SMF file contents for veracity

- Most z/OS components, features and supporting products (e.g., CICS TS, DB2, etc.) produce SMF records

- Each record has an 18- or 24-byte header containing the record type, an optional subtype, and a date and timestamp

- Record Types 00-127 are reserved for IBM products; Types 128-255 are user records

- CICS creates Type 110 records; Types 42 and 92 cover dataset activity; Types 30 and 70-79 cover CPU, paging and I/O activity

- Review SMF data using RMF, Omegamon or other reporting and analysis tool

For more information, see MVS System Management Facilities (SMF).

AIX/UNIX/LINUX Recording and Storing Performance Information

Performance data is collected somewhat differently in AIX/UNIX/LINUX systems. Data collection is command driven and activated by default, taking the form of commands (e.g., vmstat, iostat, netstat, topas and ps). The most useful of these commands is vmstat.

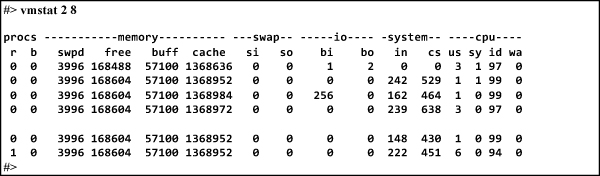

vmstat is a command that collects and displays performance data regarding processor utilization, real storage usage, paging (reflective of real/virtual storage activity), processes and interrupts (reflective of CPU activity), and I/O performance. Figure 1 shows the results of a vmstat command requesting reports every two seconds for eight iterations.
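As a rough illustration of working with this output, the sketch below averages a single vmstat column. The function name vmstat_avg is made up for this example. It assumes the output contains a header row of column names (such as "id" for idle CPU) followed by numeric rows, and it locates the column by name because column positions differ between platforms.

```shell
#!/bin/sh
# Hypothetical helper: average one vmstat column, found by its header name.
# Reads vmstat output on standard input, so it works with a pipe or a file.
vmstat_avg() {   # usage: vmstat_avg <column_name>
    awk -v col="$1" '
        # Until the header row is found, look for the requested column name.
        # If a name appears twice (e.g. "sy" on AIX), the last match is used.
        idx == 0 {
            for (i = 1; i <= NF; i++)
                if ($i == col) idx = i
            next
        }
        # After the header: count only rows with a numeric value in that field.
        $idx ~ /^[0-9.]+$/ { sum += $idx; n++ }
        END {
            if (n == 0) { print "no data for column " col; exit 1 }
            printf "%.1f\n", sum / n
        }
    '
}

# Example usage (requires vmstat on the system):
#   vmstat 2 8 | vmstat_avg id
```

The first row vmstat prints typically reflects averages since system startup rather than the current interval, so you may want to discard it before averaging.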

To direct the vmstat output to a file, enter the command: ‘vmstat 2 8 > myperformancedata.dat’

This command specifies that vmstat should report every two seconds for eight iterations and that the output is to be stored in a file named ‘myperformancedata.dat’; any valid file name can be used. It’s also possible to automate the vmstat command and create weekly “generations” of performance data files via:

‘nohup vmstat 10 60480 > myperformancedata.dat’

‘mv myperformancedata.dat myperformancedata.dat.$(date +%Y-%m-%d)’

Command explanation:

- The nohup command lets vmstat keep running after the user who started it logs off.

- The vmstat command specifies 10-second intervals and 60,480 iterations, which equals one week (7 x 24 x 60 x 60 seconds / 10).

- The ‘mv’ command runs once vmstat finishes. If the date is March 15, 2017, it renames myperformancedata.dat to myperformancedata.dat.2017-03-15. The $(date +%Y-%m-%d) construct substitutes the output of the date command, and the percent-sign (%) specifiers select the four-digit year, month and day.
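The weekly cycle can also be wrapped in a small script. What follows is a minimal sketch, not a definitive implementation: the function names (iterations_for, generation_name, collect_week) are invented for illustration, and it uses %Y so the file suffix carries a four-digit year, as in the 2017-03-15 example.

```shell
#!/bin/sh
# Sketch of a weekly vmstat collection cycle.
# All function names here are illustrative assumptions.

# Number of vmstat reports needed to cover <days> at <interval_seconds>.
iterations_for() {    # usage: iterations_for <interval_seconds> <days>
    echo $(( $2 * 24 * 60 * 60 / $1 ))
}

# Name of the archived "generation" for a given date stamp.
generation_name() {   # usage: generation_name <file> <YYYY-MM-DD>
    echo "$1.$2"
}

# Collect one week of data, then rename the file with the completion date.
collect_week() {      # usage: collect_week <file> <interval_seconds>
    vmstat "$2" "$(iterations_for "$2" 7)" > "$1" &&
        mv "$1" "$(generation_name "$1" "$(date +%Y-%m-%d)")"
}
```

To use it, add a line such as ‘collect_week myperformancedata.dat 10’ at the end of the script and start the script under nohup so that it survives logoff. Because the rename happens only after vmstat completes, the suffix reflects the day the week of collection ended.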

For more information on performance data collection on Power Systems, see AIX Performance Commands.

Windows Recording and Storing Performance Information

Windows Performance Recorder (WPR) is a menu-driven, interactive performance recording tool that’s a component of the Windows Assessment and Deployment Kit (Windows ADK) and implements Event Tracing for Windows. It collects performance data in a wide variety of forms and stores it in a format that’s exploited by Windows Performance Analyzer (WPA), which creates graphs, reports and data tables pertinent to overall system performance, individual applications, processes and other Windows functions. Performance analysis and tuning can be accomplished at the system level or for individual processes such as TXSeries. WPR and Windows ADK are functionally rich and tailorable, and the Event Trace Logs created by WPR can be used to build performance history and reveal trends and patterns of activity.

For more information on performance data collection on Windows Systems, see

Choosing Performance Data to Record

Keep in mind that collecting performance data comes with a cost, and the overhead can be significant. You may not want to run all possible performance recording options at the same time. Of course, there are times when only full data collection reveals a system’s true performance character, and it may be necessary to endure performance degradation for short periods over a limited number of days. On the other hand, if overall system performance is acceptable or at least bearable, a full data collection could provide a valuable performance snapshot and useful insight into whether CICS has performance issues or the problem lies elsewhere. Here are some key performance components for which data should be collected:

- CPU utilization

- Real storage utilization

- Swap activity

- Paging activity

- Virtual storage utilization

- Jobs processed and turnaround

- I/O activity by request type (e.g., Read, Write, etc.)

- Data buffer utilization

- Channel utilization

- Cache performance

- Data activity by disk volume

First Things First

The prelude to CICS tuning is to establish an infrastructure that effectively collects performance data, not just for CICS but systemwide, which lays the foundation for comprehensive performance analysis. You can’t tune a system until you have the data to reveal bottlenecks: where demand exceeds supply, where response times are too high and where capacity is inadequate for consumption. Performance data, abetted by quality performance reporting and analysis tools, shines a light on where to focus the effort, which parameters to modify to reduce restrictions, and where added hardware or software would provide significant improvement.

This sets the stage for a high-level performance review that determines whether the performance problem lies within CICS or outside it. Getting a feel for the full system’s performance before drilling down to CICS might save a lot of time and effort, and perhaps some system adjustments will improve overall system performance and, with it, CICS performance. The ultimate objective is to improve CICS performance, which might involve multiple CICS systems in multiple nodes within a computer network, but starts with a single node.