Scheduler Resource Allocation Domains or What the Heck is SRAD?

Technical expert Mark J. Ray explores the Scheduler Resource Allocation Domain and how it relates to performance structures in Power Systems servers running AIX

This month, and continuing in future articles, we’re going to take a look at one of the architectural and performance topics for Power Systems that confounds even seasoned administrators, and yet is critical to understand in any performance evaluation. This is the concept of a Scheduler Resource Allocation Domain, better known as an SRAD (pronounced “ess-rad”), and how SRADs form the bedrock of performance structures in Power Systems servers running AIX.

The first thing we have to do is define exactly what an SRAD is and what it does. Scheduler Resource Allocation Domain tells you next to nothing about what this construct does, let alone why it exists in Power Systems in the first place. So let’s see if we can remedy that situation:

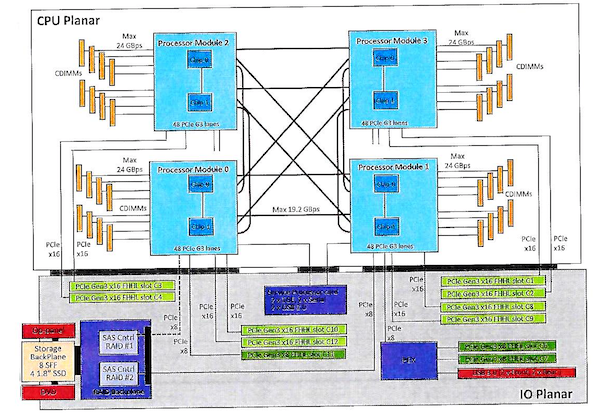

First, I want you to look at the diagram above. What you’re looking at is a graphical representation of the CPU Planar of an E850. Recall that “Planar” is merely IBM-speak for “Board.” For this discussion, we’re most concerned with the larger turquoise squares (there are four of these), each containing two smaller blue rectangles. The four large squares are processor modules; each processor module in this E850 contains two processor chips. Each processor chip holds six CPUs, or “cores.”

A note about terminology: The popular name for the thing that carries out processing in any computer is the “core.” In my articles, I tend to use “CPU,” or Central Processing Unit, because all of the AIX performance data collection programs that I use (utilities like vmstat, lparstat, CURT and the AIX Trace facility) report their data using the term CPU. For the purposes of this article, and to avoid confusion, I’m going to use “core.”

Remember that in a POWER8 system, each core contains eight hardware threads. (In the coming months, I’ll update this article for POWER9, which introduces some architectural differences over POWER8.) Depending on your application, your workload may use one, two, four, or all eight of these hardware threads at any given time. Typically, we find single thread usage in many database systems. Some middleware uses two threads, and depending on how they are written, applications can use up to eight.

In the diagram, you also see a bunch of other lines connecting each processor module, as well as connections between the processor modules and what are called Centaur Dual Inline Memory Modules (CDIMMs). These are hybrid DIMMs that IBM designed specifically for its Power Systems servers. The Centaur is actually a buffer chip that sits on the very dense CDIMM; these devices include Level 4 cache that enhances the performance of a POWER8 core/chip/processor.

We also see a number of heavy lines connecting processor modules; these are, appropriately enough, called interconnects, which allow workloads to move between processor modules at up to 19.2Gbps. You’ll also see a number of even more darkly-shaded rectangles at the bottom of the diagram; these are things like PCI slots and other controllers that won’t figure into this discussion. Here, we will be concerned exclusively with core/memory pairings on the CPU planar.

Take your time with the diagram and try to understand what it’s telling you. You’ll need this understanding for the rest of this article and, most importantly, to see how performance figures into the equation.

Back? Good. Now on to SRADs. Think of an SRAD as a tight coupling between cores and memory. Every recent generation Power system has them, not just E850s. As a further visual aid, let’s take a look at the SRAD configuration of a typical E850. We list our SRADs with the “lssrad -av” command. Doing this on a reference E850 yields the output shown below.

    REF1   SRAD        MEM      CPU
    0
              0   74150.31      0-23
              1   29880.00      24-47
    1
              2   73946.00      48-71
              3   30129.00      72-95
    2
              4   74118.99      96-119
              5   30378.00      120-143
    3
              6   73454.00      144-171
              7   29205.00      172-191

This E850 has 48 cores installed. At the top of the lssrad -av output, we see four columns: REF1, SRAD, MEM and CPU. Online documentation and blogs are often confusing about what these columns represent, so let’s see if we can clear that up. The column that usually causes the most confusion is the first one on the left. REF1 is sometimes referred to as a reference node in documentation and blogs, but in this system each REF1 is simply a processor module. Refer back to the first diagram. Now compare that with the lssrad -av output above, and you’ll begin to see the correlation. In this E850 (and again, this is pretty much a standard configuration) we have four processor modules installed.
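To make the REF1-to-SRAD grouping concrete, here is a minimal Python sketch that parses lssrad -av style text into records. It is an illustration only: the column layout of the sample string, the `Srad` class and the `parse_lssrad` function are assumptions made for this example, not part of AIX, and the MEM values are treated as megabytes, which matches the gigabyte figures quoted later in this article.

```python
from dataclasses import dataclass

# Sample text modeled on the listing above; the exact spacing is an assumption.
SAMPLE = """\
REF1   SRAD        MEM      CPU
0
          0   74150.31      0-23
          1   29880.00      24-47
1
          2   73946.00      48-71
          3   30129.00      72-95
2
          4   74118.99      96-119
          5   30378.00      120-143
3
          6   73454.00      144-171
          7   29205.00      172-191
"""

@dataclass
class Srad:
    ref1: int      # processor module the SRAD belongs to
    srad: int      # SRAD number
    mem_mb: float  # memory local to this SRAD (assumed to be MB)
    cpus: str      # logical CPU ranges, kept as text

def parse_lssrad(text: str) -> list[Srad]:
    """Hypothetical helper: turn lssrad-style text into Srad records."""
    srads = []
    current_ref = None
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0] == "REF1":
            continue  # skip blank lines and the header
        if len(fields) == 1:
            current_ref = int(fields[0])  # a line holding only a REF1 number
        else:
            srads.append(Srad(current_ref, int(fields[0]),
                              float(fields[1]), " ".join(fields[2:])))
    return srads

srads = parse_lssrad(SAMPLE)
by_module: dict[int, list[int]] = {}
for s in srads:
    by_module.setdefault(s.ref1, []).append(s.srad)

for ref1, ids in by_module.items():
    print(f"processor module {ref1}: SRADs {ids}")
```

Each processor module ends up with two SRADs, which lines up with the two processor chips per module in the diagram.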

Deciphering SRAD

Next, we have the SRAD column itself. Remember that SRAD stands for Scheduler Resource Allocation Domain. Let’s see if we can make some sense of the cryptic moniker. The term Scheduler should immediately conjure up a vision of the entity that allows workloads to run on cores. Resource is simply an entity like a core or memory that actually runs that workload. Allocation is how those resources are deployed in the system as well as their relations to one another. Domain is probably the most important term here: In this context it’s a tight coupling between cores and memory that acts, for all intents and purposes, like a discrete system unto itself. Every core has memory that is close to it, or local to that core. This memory locality is critical to the overall performance of any Power Systems server.

The next column is headed with MEM, which stands for memory. This is the amount of memory that is local to the cores in an SRAD. The last column is CPU. This can also be a little confusing. In this case, a CPU is not a core. Here, one CPU represents a single hardware thread, not a whole core, which in an E850 holds eight threads. We can illustrate this with our lssrad -av output, so bear with me. Notice that we have a total of 192 “CPUs” in this system, numbered 0 through 191. There’s no way an E850 can be configured with 192 cores, so what gives? We know that our reference E850 has the maximum number of cores (48) activated, and we also know that every POWER8 core is composed of eight hardware threads. But as this E850 was shipped from the factory, only four of those hardware threads were activated on each core. So knowing all 48 cores have been activated in SMT-4 mode, that’s four hardware threads times 48 active cores, which gives 192 active hardware threads in this system. In essence, a CPU equals a hardware thread, not a core.
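The arithmetic above can be checked with a few lines of Python. This is a small sketch, not an AIX tool: the constant names and the `expand_cpu_ranges` helper are made up for this example, and the core count and SMT mode are the ones quoted for the reference E850.

```python
CORES = 48      # active cores in the reference E850
SMT_MODE = 4    # hardware threads enabled per core (SMT-4)

def expand_cpu_ranges(spec: str) -> list[int]:
    """Hypothetical helper: expand a CPU column such as '0-23' or
    '144-147 152-171' into individual logical CPU numbers."""
    cpus = []
    for part in spec.split():
        lo, _, hi = part.partition("-")
        cpus.extend(range(int(lo), int(hi or lo) + 1))
    return cpus

logical_cpus = CORES * SMT_MODE
print(logical_cpus)  # 192

# SRAD 0 lists CPUs 0-23: 24 hardware threads, or 6 cores at SMT-4.
srad0 = expand_cpu_ranges("0-23")
print(len(srad0), len(srad0) // SMT_MODE)  # 24 6
```

The six cores behind SRAD 0 match the six cores per processor chip described earlier.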

Allocating SRADs

Our next step in understanding SRADs is how they are allocated in the first place. When a Power Systems server starts up, the hypervisor determines—or tries to determine—the best coupling of cores and memory, based on your machine’s configuration. It looks at the CPU Planar and sees X number of processor modules, Y processor chips, Z cores and a quantity of memory that is local to each. Note that I said “tries to determine” the best configuration. Any Power Systems server can have many different possible configurations of cores and memory. The hypervisor will look at what you’ve got and try to assign memory equally among the processor chips and cores.

Ideally, when you look at lssrad -av output, you should see more or less equal amounts of memory allocated to each SRAD. In reality, this is hardly ever the case. And it’s here that we encounter what is arguably the most significant performance issue with SRADs.

The Processor Chip/Core/Memory Imbalance

How many times has this happened to you: As an administrator, you constantly run performance utilities like vmstat, iostat and netstat to gather the statistics necessary to ensure the proper operation of your AIX systems. You see sustained system core utilization increase over time, to the point where you may be running at 80%, 90% or even higher usage. What do you do? Well, the first thing you do is call your application vendor to let them know the situation, right? And I will bet that many of you have received back the same answer: Add more cores. It’s rare that application or database vendors will undertake a code review and a rewrite to address a common performance problem.

Another wrinkle is that most of you likely operate in an environment where your production applications run your business and cannot be taken down for maintenance, at least not without a rigorous change-control process. You add more cores using DLPAR, or Dynamic LPAR, which allows you to add or subtract just about any system component on the fly and not take an outage, but performance doesn’t improve. In fact, after spending all that money on your shiny new resources, you find that your performance has actually decreased.

    REF1   SRAD        MEM      CPU
    0
              0   74150.31      0-23
              1   29880.00      24-47
    1
              2   73946.00      48-71
              3   30129.00      72-95
    2
              4   74118.99      96-119
              5   30378.00      120-143
    3
              6   11454.00      144-147 152-171
              7   11205.00      148-151 172-191

In our example E850, what happened was this: Remember how I said the hypervisor attempts to give you the best SRAD configuration based on the installed resources in your system? After a DLPAR of cores was done, an imbalance was created in the amount of memory allocated to each SRAD; the lssrad output above illustrates this. Here, we have about 73GB allocated to each of SRADs 0, 2 and 4 and about 30GB given to each of SRADs 1, 3 and 5. SRADs 6 and 7 had a bit more than 11GB each. The machine had about 345GB of memory activated. When the DLPAR operation was used to add another ten cores (or 40 hardware threads), the hypervisor did its best to allocate whatever free memory was left in the system to those cores. Because the core activations came out of processor chips 6 and 7, they had to make do with the memory that was already allocated to those SRADs. The problem is obvious.
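One way to put a number on this imbalance is to divide each SRAD’s local memory by its count of logical CPUs. The Python sketch below does that for the post-DLPAR listing; the MEM and CPU values are copied from that listing, MEM is assumed to be in megabytes, and the `count_cpus` helper and the “MB per logical CPU” metric are illustrative choices for this example, not something AIX reports.

```python
# (SRAD number, local memory in MB, CPU column) from the post-DLPAR listing.
SRADS = [
    (0, 74150.31, "0-23"),
    (1, 29880.00, "24-47"),
    (2, 73946.00, "48-71"),
    (3, 30129.00, "72-95"),
    (4, 74118.99, "96-119"),
    (5, 30378.00, "120-143"),
    (6, 11454.00, "144-147 152-171"),
    (7, 11205.00, "148-151 172-191"),
]

def count_cpus(spec: str) -> int:
    """Hypothetical helper: count logical CPUs in a column like '144-147 152-171'."""
    total = 0
    for part in spec.split():
        lo, _, hi = part.partition("-")
        total += int(hi or lo) - int(lo) + 1
    return total

# Local memory available per logical CPU in each SRAD.
mb_per_cpu = {srad: mem / count_cpus(cpus) for srad, mem, cpus in SRADS}
richest = max(mb_per_cpu, key=mb_per_cpu.get)
poorest = min(mb_per_cpu, key=mb_per_cpu.get)

for srad, value in mb_per_cpu.items():
    print(f"SRAD {srad}: {value:8.1f} MB per logical CPU")
print(f"SRAD {richest} has {mb_per_cpu[richest] / mb_per_cpu[poorest]:.1f}x "
      f"the local memory per CPU of SRAD {poorest}")
```

With these figures, SRADs 6 and 7 come out at under 500 MB of local memory per logical CPU, while SRAD 0 has more than 3,000 MB.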

Preventing SRAD Imbalance

Is there a way you could have prevented the SRAD imbalance without taking an outage? Well, you could have made the occurrence of the problem a lot less likely: You could have just added more memory to your system to begin with. In our reference E850, we had 345GB of memory active. But we had another 94GB inactive for a total system capacity of 448GB. So let’s play this scenario out: What if we had DLPAR’d that additional 94GB of memory into our system first, and then DLPAR’d our additional cores? And if we could have shut the system down altogether and restarted it, would that have improved the SRAD balance even more? It’s likely that a lot (if not all) of that additional memory was “local” to processor chips 6 and 7. So by adding the memory first, we would have made sure we had enough allocated to the cores in those chips. Recall that IBM mandates that you populate all of your memory slots in sequence and equally, rather than sticking all of your memory in some slots and not in others; this is a big reason why. Bottom line: Always develop a holistic approach towards solving any performance problem.

By the way: So far in this article, we’ve been looking at an E850 with dedicated cores; a system that runs in full partition mode and doesn’t share resources with other systems. But what about an LPAR in a shared-resource environment? In those cases, SRAD imbalances are trickier to deal with, but not insurmountable. When operating in a shared environment, the key word becomes “precedence.” Let’s take another E850, which shares its frame (and cores) among a few LPARs. (Let’s assume each LPAR has its own storage and networking devices.) Now, let’s rerun the DLPAR of cores scenario. Same problem, right? Now what do we do?

Whenever I’m on a performance gig, one of the first things I do is ask the site owners to rate their systems and applications according to importance. And initially, the answer I get is always the same: “They’re all important.” So I make a nuisance out of myself and press the question until I get a different answer, an answer that definitively ranks the systems, and especially partitions in an LPAR’d environment, according to their importance to the site’s business. Why do I make a pest out of myself on this point? Because when you’re dealing with SRAD imbalance in a system with multiple LPARs, you try to correct it the same way: by restarting your LPAR. But that LPAR will only be able to re-allocate and re-balance the cores and memory that were originally allocated to it in its partition profile. Doesn’t sound like you’re going to be able to do a whole lot of re-balancing, does it? Well, maybe not.

Let’s think about our example E850 where we have our main application LPAR sharing its resources with two other logical partitions; let’s call them LPARs A, B and C, where A is our main app LPAR. And we’ve run into an SRAD imbalance. How do we get the hypervisor to give LPAR A the best shot at balanced, well-performing SRADs? What we need to do is shut every partition down and bring them back up in an order that will allocate the best SRAD placement to LPAR A. So we shut everything down and restart them, making sure LPAR A is restarted first. The hypervisor will give LPAR A the best SRAD placement and then basically just do its best with partitions B and C. And what if you have a dozen, or even a hundred LPARs in a frame? My best advice is to work with your site owners and hammer out the “List of Precedence”; without this list, both you and the hypervisor will have to settle for best efforts.
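A “List of Precedence” can be as simple as a ranked table that drives the restart order. The Python sketch below is only a planning aid under stated assumptions: the LPAR names and ranks are hypothetical, and it prints an order rather than calling any HMC or AIX command.

```python
# Hypothetical precedence ranks agreed with the site owners (1 = most important).
precedence = {"C": 3, "A": 1, "B": 2}

# Restart in rank order so the most important LPAR is placed first.
restart_order = sorted(precedence, key=precedence.get)

print("1. Shut down all partitions:", ", ".join(sorted(precedence)))
for step, lpar in enumerate(restart_order, start=2):
    print(f"{step}. Activate LPAR {lpar} (precedence {precedence[lpar]})")
```

The same ranked table scales to a dozen or a hundred LPARs; the hard part is getting the site owners to agree on the ranks.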

Looking Towards Thread Migrations

So there you have SRADs: How they’re put together and their basic function in a Power Systems environment. In a future article, I’ll cover thread migrations. To prepare for that topic, what I’d like you to do is run a utility called topas. This diagnostic aid ships with every AIX distribution. Invoke it from a root command prompt with the “-M” flag; your invocation will be “topas -M”. See the columns headed “Local”, “Near” and “Far”? It is these designations we will look at in the future; they are critical to the performance of a Power/AIX system.

In the meantime, we conclude this article with this thought: Any computing system, regardless of whether it’s an IBM, HP, SUN/Oracle, or even a Wintel box, has four major component groups: cores, memory, storage and networking. Never think you can simply throw hardware at one of these groups and solve a performance problem without taking the other three into consideration. That may be the quick way, and it may indeed be the way your application vendor recommends, but as a performance practitioner, you know your systems best. You know how they’re put together and how they will respond to hardware changes. Make your case to management about the best way to deal with performance issues. They might not listen, and they might reject things like failovers and outages. But then again, they might agree with you. And always remember that you are derelict in your duty if you don’t present all available options to the decision makers.