Of Dials and Switches: An Introduction to Tuning the AIX Kernel


Over the past couple of years, I’ve frequently written about tuning attributes of the AIX kernel. Be it for memory, CPU, networking or storage, I’ve explained how to manipulate certain pieces of kernel code for specific purposes. In addition, I’ve shown you how to exploit raw and scaled CPU throughput modes, asynchronous I/O in storage, and many different network settings.

Most readers who email me say this advice has had a positive impact on their environments. I’m always grateful for these responses, and in this case they provided some inspiration as well: because many of you asked about the full range of kernel tuning options available in AIX, I realized I should delve deeper into the topic in a new series of articles. So let’s get to it.

The Evolution of the Kernel

AIX, Linux and most UNIX variants have a set of kernel tuning options that administrators can manipulate. To varying degrees, these options (these dials and switches) let you adjust attributes of the particular kernel you’re running in your environment.

The kernel is the heart of any operating system. A major function of this core code is to provide access to the hardware on which it runs. How that access is granted, and more importantly, the degree to which it’s granted, depends entirely on code routines that manipulate the four performance resource groups: CPU, memory, networking and storage.

Thirty-some years ago, in an era I refer to as the bad old days of UNIX, kernel code was minimal in size (1-2 MB) and very limited in capability. It provided access to disk drives, network adapters and monitors, and that was about it. Reads and writes to disk occurred at one speed: slow. Data flowed over the network at 300 baud if you were lucky. Monitors could display only monochrome alphanumeric characters. Tuning options as such were not available in the UNIX kernel; changing the speed at which data flowed meant rewriting and recompiling the code.

As computing systems evolved, it became clear that more sophisticated hardware required the support of a more sophisticated kernel. So, OS kernels got bigger. With AIX, the tipping point, in my opinion, came in the late 1990s. By then admins recognized that IBM was shipping a set of discrete dials and switches that allowed us to adjust various performance aspects of kernel code. Before LPARs, there were wholly physical machines called SP/2s. These systems included a switch that routed information among their various nodes (also physical), functioning much as network switches and routers do today.

Db2 and Oracle were the big databases that ran on SP/2. There was also middleware like MQ and backup software like ADSM (which eventually became Tivoli Storage Manager). The presence of these and other software packages meant that various parameters in the SP/2 switch had to be tuned beyond their default settings to realize the best performance. Concurrently, IBM started shipping AIX with a set of tunables that focused mostly on the network.

Of course, hardware power and performance have taken quantum leaps since those days. As a result, the capability to tune ever more discrete pieces of the AIX kernel code has taken on significantly greater importance.

As of the latest AIX version (7200-02-02-1810), there are nearly 300 discrete tunables in the four performance groups. Once the range of possible values is factored in, you’re looking at literally thousands of tuning options. Keeping track of, and making use of, all these possible combinations is far more than a full-time job: It’s a life’s work. I know, because I’ve spent more than 20 years doing this.
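If you want to see where your own system stands, here’s a minimal sketch for a POSIX shell (sh or ksh) on AIX. It assumes that schedo, vmo, ioo and no each print one “name = value” line per tunable when run with -a, and that -F adds the restricted tunables to that listing. The helper name count_tunables is hypothetical, and your totals will vary with the AIX level installed.

```shell
#!/bin/sh
# Hypothetical helper: count the "name = value" lines read from stdin.
# Separator lines (such as a restricted-tunables header) contain no " = "
# and are therefore not counted.
count_tunables() {
    grep -c ' = '
}

# Query the live tuning commands only where they exist (that is, on AIX).
if command -v schedo >/dev/null 2>&1; then
    total=0
    for cmd in schedo vmo ioo no; do
        n=$("$cmd" -F -a | count_tunables)   # -F includes restricted tunables
        printf '%-7s %s\n' "$cmd" "$n"
        total=$((total + n))
    done
    printf '%-7s %s\n' total "$total"
fi
```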

Fortunately, the built-in AIX documentation makes this challenge a bit more manageable. The tuning options are segregated into individual units, each with its own man-page-style help. Further, each performance resource group lets you display its tunables in three ways: as a terse list, in spreadsheet format or in verbose list format. Newbie admins in particular should always opt for the verbose list; it provides the best at-a-glance information about every AIX kernel tunable.
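Using schedo as the example, here’s a sketch of how those formats map to command flags, assuming the standard AIX tuning-command options: -a for the terse list, -x for the comma-separated spreadsheet format, -L for the verbose list and -h for help on an individual tunable. The output file names are arbitrary choices for illustration.

```shell
#!/bin/sh
# Sketch: capture the scheduler tunables in each listing format.
# Guarded so it only runs where schedo exists (AIX).
if command -v schedo >/dev/null 2>&1; then
    schedo -a > schedo_terse.txt      # terse: one "name = value" line each
    schedo -x > schedo_sheet.csv      # spreadsheet: comma-separated fields
    schedo -L > schedo_verbose.txt    # verbose: values, range, unit, type
    schedo -h timeslice               # man-page-style help for one tunable
fi
```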

A Closer Look at Key Tunables

IBM groups kernel tunables into numerous categories. There are scheduler and memory load control tunables, VMM tunables, synchronous and asynchronous I/O tunables, disk and disk adapter tunables, and interprocess communication tunables. That’s a broad swath, and obviously I can’t cover everything. Some areas I won’t deal with here include the Network File System options (NFSO) and the Reliability, Availability and Serviceability options (RASO), since I’ve only rarely seen these tuned at the sites I’ve evaluated.

I also won’t get into the Logical Volume Manager (LVM), adapter or interprocess tunables, such as the LVMO settings and IPCS environment variables. This stuff is pretty deep, and it would take one or more articles to explain adequately. Likewise, I won’t deal with the Active System Optimizer options (asoo); these too would require an article of their own.

Instead, I’ll focus on the specific sets of CPU, memory, networking and storage tunables that I’m asked about most frequently in my performance practice: the SCHEDOs (scheduling options) for CPU, the virtual memory options (VMO) for memory, the input and output options (IOO) for storage and the networking options (NO) for networking.

The AIX scheduler is the master control program, so to speak, for everything that happens in an AIX system. Its job is to grant CPU execution time to every thread in the box, whatever functions those threads perform. As in the early days of computing, these functions can be broadly classed under the umbrella of hardware access, but nowadays the degree to which you can finely tune the kernel is truly amazing. Let’s skip the terse and spreadsheet listings of our schedo tunables and go right to the display where we can actually learn something. Here’s how to display your schedos in verbose list format (-L, with -F forcing the restricted tunables to be included as well) and write that list to a file:

schedo -FL > schedo_list

The schedo -FL output is displayed in figure 1. This is a neat and concise display of every CPU tunable in the latest AIX release. Dashed lines separate the tunables, and to the right of each tunable name you’ll find a series of mostly numeric values. Descriptive words like “boolean,” “dispatches” and “spins” follow those values. The last column of output generally consists of a single letter (typically a capital letter, though there are some lower-case exceptions); for the schedos, D and B are most common.

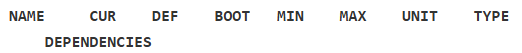
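If you’d like to check which type letters dominate on your own system, you can tally that last column. This is a minimal sketch built on the layout just described: each tunable’s line starts at the left margin and ends in its single-letter type, the header ends in TYPE, and the dashed separators and indented dependency lines are filtered out by their leading character. The helper name tally_types is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "schedo -FL"-style output on stdin and count
# how many tunables carry each single-letter TYPE code (D, B, S, ...).
tally_types() {
    awk '
        # Tunable lines start at the left margin and end in a one-letter
        # type code. The leading-character test drops dashed separators
        # and indented dependency lines; the header fails the last-field
        # test because it ends in "TYPE".
        /^[^ \t-]/ && $NF ~ /^[A-Za-z]$/ { count[$NF]++ }
        END { for (t in count) printf "%s %d\n", t, count[t] }
    ' | sort
}

if command -v schedo >/dev/null 2>&1; then
    schedo -FL | tally_types
fi
```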

At the top of the file, you’ll see a header line made up of abbreviations. It looks something like this:

NAME    CUR    DEF    BOOT    MIN    MAX    UNIT    TYPE
     DEPENDENCIES

This line describes the characteristics of every CPU tuning option that follows. From the left, these abbreviations head the columns for the tunable’s name (NAME), its current (CUR) and default (DEF) values, and its boot-time (BOOT) value. The next two abbreviations, MIN and MAX, represent the minimum and maximum values allowed for the tunable. Next is the unit (UNIT), which can be boolean, dispatches, spins, microseconds or any of numerous others. Then come the single-letter designations in the TYPE column. Finally, there’s the DEPENDENCIES heading. Dependencies are uncommon, but when a tunable has them, you’ll see a list of other CPU tunables that must be considered, or set to specific values, when you tune the scheduling option you’re looking at. I’ll get into this later in the series, but it’s not as confusing as it sounds.

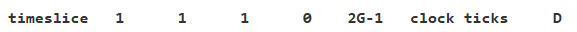
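To make the column layout concrete, the following sketch labels each field of a tunable’s line. It assumes the first six whitespace-separated fields are NAME, CUR, DEF, BOOT, MIN and MAX, that the last field is TYPE, and that everything in between is UNIT (units such as “clock ticks” contain a space). The helper name describe_tunable is hypothetical, and dependency lines are ignored.

```shell
#!/bin/sh
# Hypothetical helper: label the columns of each tunable line in
# "schedo -L"-style output read from stdin.
describe_tunable() {
    awk '
        # Only tunable lines: left-margin start, one-letter final field.
        /^[^ \t-]/ && NF >= 7 && $NF ~ /^[A-Za-z]$/ {
            unit = ""
            # Fields 7 .. NF-1 form the UNIT column, which may hold spaces.
            for (i = 7; i < NF; i++) unit = (unit == "" ? $i : unit " " $i)
            printf "NAME=%s CUR=%s DEF=%s BOOT=%s MIN=%s MAX=%s UNIT=%s TYPE=%s\n",
                $1, $2, $3, $4, $5, $6, unit, $NF
        }
    '
}

if command -v schedo >/dev/null 2>&1; then
    schedo -L timeslice | describe_tunable
fi
```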

Now page down through the file until you come to the line that starts with “timeslice.” We’ll use that tunable as an example. With this information as a template, you’ll be able to make sense of what this and the other scheduling options are trying to tell you. Here’s the timeslice line:

Timeslice is the dial that controls the amount of time a thread is allowed to run on a CPU. By default, a timeslice in AIX is 10 milliseconds (ms). Each 10ms timeslice spans many processor clock cycles, and how many depends on the clock frequency of the IBM Power Systems server you’re running. For example, a P850 typically runs at 3,026 MHz, which works out to more than 3 billion clock cycles per second.
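Working the numbers for that example shows the scale involved. The 10 ms timeslice and the 3,026 MHz frequency are the figures quoted above; the cycles per timeslice are simply their product, roughly 30 million.

```latex
\[
3{,}026\ \text{MHz} \times 10\ \text{ms}
  = \left(3.026 \times 10^{9}\ \tfrac{\text{cycles}}{\text{s}}\right)
    \times \left(10^{-2}\ \text{s}\right)
  \approx 3.03 \times 10^{7}\ \text{cycles}
\]
```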

The timeslice tunable has other uses, but the two most common reasons to tune it are 1) to reduce context switching and 2) to give threads more running time (or occasionally, less time) on a given CPU. Reading the timeslice line from the left, you’ll find its current, default and boot values, followed by its allowed range, which runs from 0 to about 2 billion. In the far right column is a capital D, which means the tunable is dynamic and can be changed at any time. Timeslice has no dependencies.

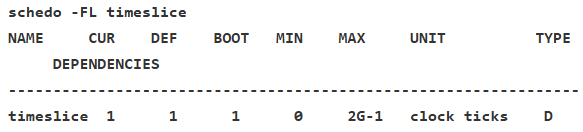

That’s a quick overview of the timeslice tunable. You can also display the header line for any single tunable: rather than listing everything with schedo -FL, simply append the tunable’s name to the command (schedo -FL timeslice). In the case of timeslice, you’ll get the header line followed by just the timeslice entry.
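For a single tunable, a few read-only variations are worth knowing. This sketch assumes the standard AIX schedo syntax: -FL with a tunable name limits the verbose listing to that tunable, -h prints its help text, and -o with a bare name (no =value) displays the current setting without changing it.

```shell
#!/bin/sh
# Sketch: inspect a single scheduler tunable without changing it.
tunable=timeslice
if command -v schedo >/dev/null 2>&1; then
    schedo -FL "$tunable"    # header line plus this tunable's entry
    schedo -h "$tunable"     # man-page-style description of the tunable
    schedo -o "$tunable"     # current value only, printed as "name = value"
fi
```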

So take some time to get to know every schedo tunable, both in master-list format (schedo -FL) and individually (schedo -FL followed by the tunable’s name). You’ll be surprised at how quickly you learn what all the tunables do. Incidentally, the same display method works for the memory (vmo), storage (ioo) and networking (no) tuning parameters.
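Applied across all four resource groups, the verbose listings can be captured in one pass. This is a minimal sketch, assuming vmo, ioo and no accept the same -F and -L flags as schedo (check the man pages on your AIX level); the file names simply follow the schedo_list convention used earlier, and dump_all_lists is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: write each tuning command's verbose list to <command>_list,
# mirroring "schedo -FL > schedo_list".
#   schedo = CPU, vmo = memory, ioo = storage I/O, no = networking
dump_all_lists() {
    for cmd in schedo vmo ioo no; do
        if command -v "$cmd" >/dev/null 2>&1; then
            "$cmd" -FL > "${cmd}_list"
        else
            echo "skipping $cmd: command not found" >&2
        fi
    done
}

dump_all_lists
```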

Looking Ahead

In the second installment in this series, I’ll tell you how to get more detailed information about all the tunables, and how to change a tunable’s value. We’ll also get philosophical about the great restricted/unrestricted debate. Oh, you’ve not heard of that? Then you’ll definitely want to, ahem, stay tuned.