IBM Unleashes the z17

The public got its first look at the z17 in April, when it was introduced with a heavy focus on the new hardware's AI capabilities.

IBM is doubling down on AI as it rolls out its next mainframe, set for general availability June 18.

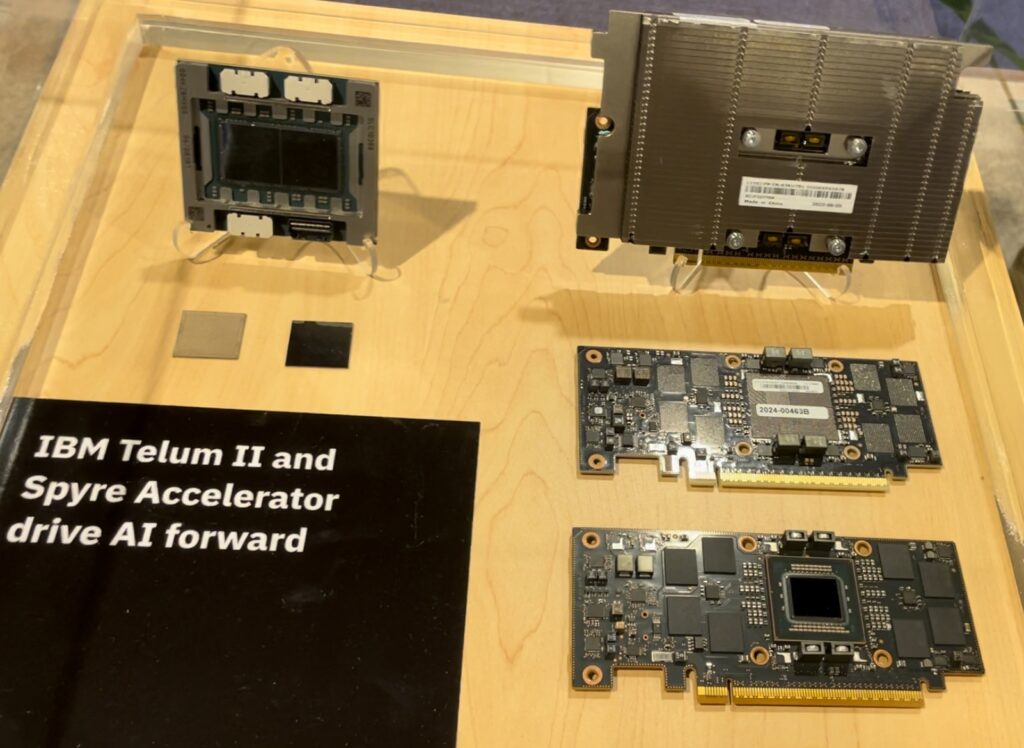

IBM’s z16 brought on-chip AI processing to the platform for the first time with the introduction of the Telum chip in 2022. Its successor, the z17, features two AI-focused chips—the Telum II and the Spyre Accelerator.

The new mainframe was introduced in April as part of a special Z Day virtual event. “This is a full-stack launch across IBM Z and IBM, so we’re really thrilled for all of you to learn about it, and most especially for our clients to get their hands on it starting next month,” Tina Tarquinio, chief product officer for IBM Z and LinuxONE, said at an April 1 press briefing ahead of the public announcement.

In rolling out its new mainframe, IBM is boasting of the z17’s ability to tackle AI workloads and help organizations keep their sensitive data on-premises while performing AI inferences in real time, a capability that bolsters use cases such as fraud detection. “These [chips] will help us run AI inferencing at scale, scoring every transaction with larger, more accurate models at every transaction with no impact to SLAs [service level agreements] or performance,” Tarquinio said.

Since the z16 was introduced in 2022, interest in AI has exploded with the proliferation of generative AI (GenAI), a label that includes large language models (LLMs) like ChatGPT. The z16’s original Telum processor was geared to handle predictive, or traditional, AI workloads. And while the Telum II retains that focus, the Spyre Accelerator works at the other end of the AI spectrum, specializing in GenAI models. With the popularization of GenAI, “we’ve seen models grow by a factor over a hundred, and this leads to higher requirements for AI compute on the infrastructure,” said Elpida Tzortzatos, IBM Fellow and CTO for AI on IBM Z.

The New Trend: Multi-Model AI

The combination of the Telum II and Spyre chips will enhance organizations’ capabilities to leverage multi-model AI, “a new trend that we see rising in the market and with our clients,” Tzortzatos said. “…Clients now are leveraging both the strengths of predictive AI models along with large language models and coder large language models.”

Predictive AI models, she explained, are ideal for processing and detecting patterns in structured and numerical data, while GenAI models excel at understanding unstructured data such as natural language. “Being able to derive insights from both structured and unstructured data is important in terms of our clients really designing more robust, more efficient AI systems that deliver more accurate results,” Tzortzatos said.

While GenAI has secured much of the tech community’s mindshare in recent years, there will continue to be a place for both kinds of AI, according to Tzortzatos. “Predictive AI models will still continue to be the best fit models, if you will, for implementing use cases such as demand forecasting and re-scoring and anti-money-laundering and fraud detection,” she said. On the other hand, GenAI “opens up the apertures for a whole set of new use cases,” she added. Those use cases range from summarizing documents to extracting key insights from unstructured data.

Data Security

One challenge organizations face in deploying AI is protecting their data. Keeping sensitive data on-premises, by processing it on the mainframe rather than sending it off-site, offers an advantage here. “Clients need to ensure that they can deploy AI in secure environments, or they risk exposing their data,” Tzortzatos said. “AI introduces several new and amplified security risks. For example, users can craft inputs to bypass restrictions and leak sensitive data or make the AI models behave unexpectedly.”

Tarquinio noted that in designing the z17, IBM spent over 2,000 hours engaging with mainframe users across 80 companies. “What we heard from client after client after client,” Tzortzatos said, “was that they wanted to be able to run these GenAI use cases on-prem in a secure environment where they had control over their data, but also the model IP [intellectual property].” On top of that, an IBM Institute for Business Value study, released in October 2024, found that almost 80% of the 2,500 IT executives polled see AI on the mainframe as “absolutely essential,” Tarquinio said.

Quantum Safety

Another security concern on the horizon relates to quantum computing. As quantum-related threats increase, so will the rules governing how data is handled. “The threats and the regulatory requirements are really increasing every day for our clients. And so we really leveraged the strength of our platform to help them prepare for those threats and those audits,” Tarquinio said.

Quantum-safe encryption came to the mainframe with the z16, and the z17 introduces tools meant to ease compliance responsibilities related to quantum technology. “We’re introducing new tools to help our clients get started even faster on that quantum-safe journey,” Tarquinio said.

Real-Time Processing Speeds

In addition to addressing concerns over data security, keeping AI workloads on the mainframe also improves processing speeds, something especially important for tasks such as real-time fraud detection. In speaking with clients around the globe, IBM learned that “they wanted to be able to embed AI in each transaction but without slowing down those transactions,” Tzortzatos said. IBM’s new hardware enables response times of single-digit milliseconds, “so our clients can really score with AI thousands of transactions per second,” she added.

“This system could do up to 450 billion AI inferences in one day” at “eight nines” (99.999999%) of availability, Tarquinio said. And the Telum II chip alone increases AI throughput over the z16 by a factor of seven and a half, she added.
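To put those two figures in more familiar units, here is a back-of-the-envelope sketch in Python. It assumes the 450 billion inferences are spread evenly over a 24-hour day and that "eight nines" is measured over a 365-day year; the function names are illustrative and not part of any IBM tooling.

```python
# Rough unit conversions for the quoted throughput and availability figures.
# Assumptions (not from IBM): load is spread evenly across the day, and
# availability is measured over a 365-day (non-leap) year.

SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY


def inferences_per_second(inferences_per_day: float) -> float:
    """Average inferences per second for a given daily total."""
    return inferences_per_day / SECONDS_PER_DAY


def downtime_seconds_per_year(nines: int) -> float:
    """Permitted downtime per year at N nines of availability.

    Eight nines means 99.999999% available, so the unavailable
    fraction is 10 ** -8.
    """
    unavailable_fraction = 10.0 ** (-nines)
    return unavailable_fraction * SECONDS_PER_YEAR


if __name__ == "__main__":
    rate = inferences_per_second(450e9)
    downtime = downtime_seconds_per_year(8)
    print(f"Average rate: {rate:,.0f} inferences per second")
    print(f"Downtime budget: {downtime:.3f} seconds per year")
```

Under those assumptions, the daily peak works out to roughly 5.2 million inferences per second on average, and eight nines allows about a third of a second of downtime per year.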

The Telum II processor will have eight cores, while the PCIe-attached Spyre Accelerator will have up to 32 cores, Tarquinio noted, adding that the initial release of the z17 will support up to 48 Spyre cards on one machine.

“These two [chips] will really help cover a wide range of use cases and also have room to grow, because as you surely know, the market is changing all the time in this space. And we want to really give our clients the infrastructure they need to pivot and be agile with it,” Tarquinio said.

Energy Efficiency

As AI grows, so does its appetite for power, a major factor for organizations to consider when implementing the technology. “Not only do they have to worry about having the compute that they need to deploy those AI use cases, they also have to deal with power consumption and energy cost challenges,” Tzortzatos said.

With that in mind, IBM says its new AI accelerators are relatively efficient. “Our Telum II accelerator adds about 10 to 12 watts on that silicon, and our PCIe-attached [Spyre] accelerator has an average power consumption of 75 watts. And both of them are very power-efficient when you compare that to other types of accelerators out there,” Tzortzatos said. Added Tarquinio: “Those other types of accelerators could [require] three, four, five times more energy usage than the Spyre.”
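The quoted wattages can be turned into a rough power budget. The Python sketch below is illustrative arithmetic only: it combines the 75-watt average per Spyre card with the 48-card maximum for the initial z17 release, and it reads "three, four, five times" as a simple multiple of the Spyre figure, which is an assumption about what Tarquinio meant. The names are hypothetical.

```python
# Illustrative power arithmetic from the figures quoted in the article.
# Assumption: "three, four, five times" is read as 3x, 4x and 5x the
# Spyre's 75 W average, not as 3x-5x on top of it.

SPYRE_AVG_WATTS = 75   # average per-card figure quoted by Tzortzatos
MAX_SPYRE_CARDS = 48   # maximum cards in the initial z17 release


def total_watts(cards: int, watts_per_card: float) -> float:
    """Total average draw for a set of identical accelerator cards."""
    return cards * watts_per_card


def comparison_watts(multiplier: float) -> float:
    """Per-card draw of a hypothetical accelerator at N times the Spyre."""
    return multiplier * SPYRE_AVG_WATTS


if __name__ == "__main__":
    spyre_total = total_watts(MAX_SPYRE_CARDS, SPYRE_AVG_WATTS)
    print(f"48 Spyre cards: {spyre_total:,.0f} W")
    for multiplier in (3, 4, 5):
        per_card = comparison_watts(multiplier)
        fleet = total_watts(MAX_SPYRE_CARDS, per_card)
        print(f"{multiplier}x accelerator: {per_card:.0f} W per card, "
              f"{fleet:,.0f} W for 48 cards")
```

On that reading, a fully populated machine's Spyre cards would average about 3.6 kilowatts, against roughly 10.8 to 18 kilowatts for the same number of cards drawing three to five times as much.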

Hard Acts to Follow

With the z17, IBM is hoping to build on three generations of platform growth as the mainframe continues to run more than 70% of the world’s transactions and 90% of credit card transactions. Since the z14, each iteration has outperformed its predecessor in terms of both revenue and installed capacity, according to Tarquinio.

By providing infrastructure to meet the growing demands of AI, IBM believes that momentum will carry forward. “If you think of data as the fuel for AI, then infrastructure is the engine that allows organizations to drive their AI journeys to success,” Tzortzatos said.