Best Practices for AIX in a SAN Environment: Part 2, I/O Configurations

In my last article, I covered the best practices for AIX in a SAN environment from a SAN perspective, including SAN topologies, multipathing and FC-HBA configuration. In this article, I’ll explain the best practices for the I/O configuration from the AIX perspective: recommendations for data layout, filesystems, flash cache configuration and documentation.

General Best Practices

As a reminder, it’s a best practice to avoid making changes if performance is OK, because what helps in one situation may create an issue in another. Another best practice for AIX is to upgrade to the latest stable versions of AIX and VIOS. The IBM development team continually tries to improve performance by changing the default values of configuration parameters. Therefore, the defaults for I/O parameters such as queue depth and maximum transfer size tend to improve with each release of the OS.

Are All Disk Arrays Created Equal?

You should have a good grasp of SAN and AIX capabilities, because in best practices there is no single perfect answer. Therefore, it’s necessary to gather big-picture schematics of your applications and SAN environment and understand them, because not all disk arrays and applications are created equal. Disk technologies differ in speed, spindle count, latency and IOPS. Consequently, some applications or LPARs may perform well on traditional disks with platters, while others may need premium disks such as NVM drives or flash disks because their I/O requirements are higher. The idea is to understand your SAN and application environment. This includes understanding your performance metrics so you can establish a performance baseline and use it to identify problems in your AIX hosts.

Best Practices to Improve Disk Performance in AIX

There are some general best practices to improve disk performance in AIX. One is to reduce the number of I/Os by using bigger caches at the application, filesystem or storage system level. Another is to improve average service times by identifying a proper LUN size for your application and storage equipment, by using a better data layout, or by using AIX flash cache or LUN devices backed by SSDs. A third is to reduce the overhead of handling I/Os by using raw devices or filesystems with direct I/O access. I’ll explain these techniques in the following sections.

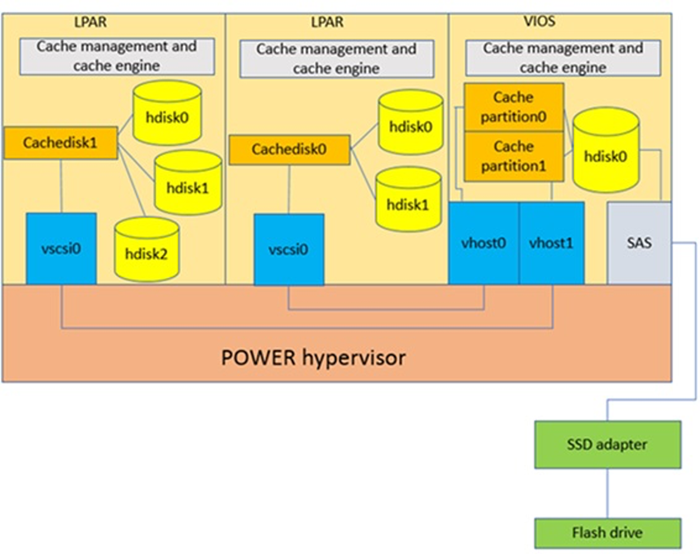

AIX Flash Cache With vSCSI Technology

An easy way to improve disk performance in AIX is to use AIX flash cache. This feature caches frequently accessed data, which can significantly improve performance. To use it, you’ll need a server-attached flash device or a LUN device backed by SSD. After assigning the flash device to the VIOS, you can create a cache pool, and then create and assign a cache partition to each LPAR you want to improve. From then on, read I/O inside the LPAR improves, and this is transparent to the applications running in the LPAR. Figure 1 summarizes the AIX flash cache architecture.

These are the general steps to use AIX flash cache:

1. Create a cache pool on VIOS

2. Create a cache partition on the cache pool (on VIOS)

3. Assign cache partition to a vSCSI adapter present on VIOS

4. Assign the cache disk to the storage disk

5. Start caching on LPARs

Data Layout Best Practices

The data layout affects I/O performance more than any tunable I/O parameter. The best practice for data layout is to balance I/Os evenly across all physical disks to avoid hot spots. This applies even when the hdisks are LUN devices backed by several physical disk drives. From the AIX standpoint, hot spots are a bad thing, because each hdisk device has its own threads and queue depth, and these resources can be exhausted in both AIX and the storage equipment. The best practice for data layout depends on the type of I/O operation, which can be random or sequential.
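As a rough illustration of what a hot spot looks like in numbers, the sketch below flags any hdisk whose I/O rate is well above the average for its volume group. The per-disk IOPS figures, the `hot_spots` helper and the 1.5x threshold are all hypothetical choices for illustration, not values from AIX or any vendor.

```python
def hot_spots(iops_by_disk, factor=1.5):
    """Return the disks whose IOPS exceed `factor` times the mean IOPS."""
    mean = sum(iops_by_disk.values()) / len(iops_by_disk)
    return sorted(d for d, iops in iops_by_disk.items() if iops > factor * mean)


# Hypothetical per-hdisk IOPS, e.g. collected from a monitoring tool.
sample = {"hdisk1": 220, "hdisk2": 240, "hdisk3": 1900, "hdisk4": 230}
print(hot_spots(sample))
```

In a balanced layout the function returns an empty list; here hdisk3 stands out because it carries most of the load.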

Random I/O Best Practices

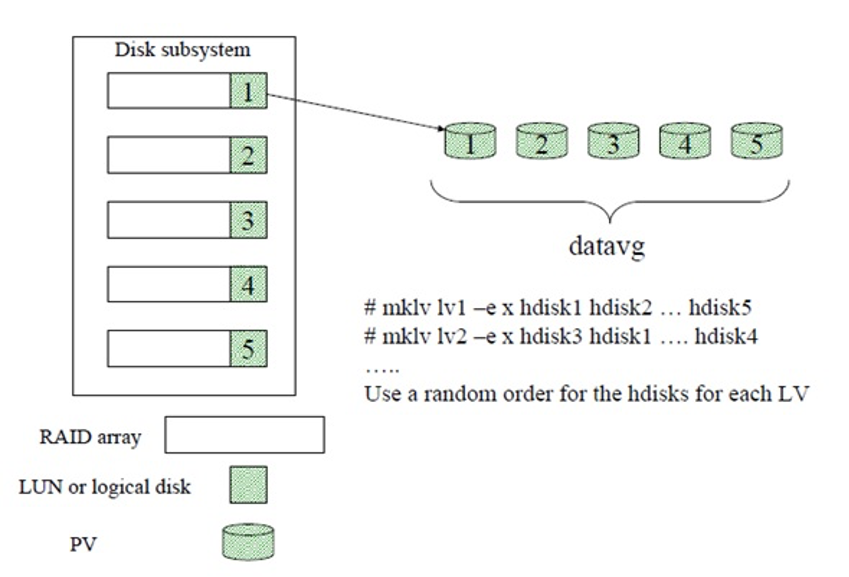

The best practice for random I/O is to use LUN devices that provide high IOPS and low latency, and to avoid hot spots in AIX by using PP striping or LV striping. The recommendation is to use PP striping, because it’s easier to maintain and lets you spread I/Os evenly across all physical disks. Follow these general suggestions for PP striping:

· Create RAID arrays of equal size and RAID level

· Create VGs with one LUN from every array

· Spread all LVs across all PVs in the VG

· Use a random order for the hdisks for each LV

To use PP striping, use the mklv -e x or chlv -e x commands. Figure 2 illustrates how PP striping works:

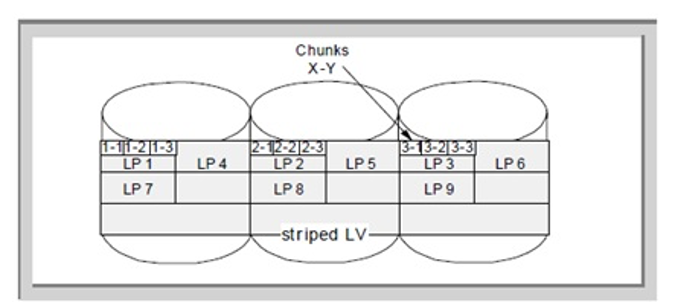
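The idea behind PP striping can be sketched with a simplified model: physical partitions are handed out round-robin across the hdisks, in the order the hdisks are listed for each LV. The `spread_pps` helper and the hdisk names are hypothetical, and the real LVM allocator takes more factors into account, so treat this only as an illustration of why varying the hdisk order per LV helps.

```python
from collections import Counter


def spread_pps(num_pps, pvs):
    """Place physical partitions on PVs round-robin, in the order given."""
    return [pvs[i % len(pvs)] for i in range(num_pps)]


# Hypothetical volume group: one LUN (hdisk) from each of four RAID arrays.
lv1 = spread_pps(10, ["hdisk1", "hdisk2", "hdisk3", "hdisk4"])
# A second LV lists the same hdisks in a different order, so the extra
# partitions of each LV do not all land on the same hdisks.
lv2 = spread_pps(10, ["hdisk3", "hdisk4", "hdisk1", "hdisk2"])

per_disk = Counter(lv1 + lv2)
for disk in sorted(per_disk):
    print(disk, per_disk[disk])
```

With these two orderings every hdisk ends up holding five partitions, whereas using the same order for both LVs would leave hdisk1 and hdisk2 with six each and the others with four.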

On the other hand, LV striping sometimes provides better performance in a random I/O scenario, but it makes it more difficult to add physical volumes to the LV and rebalance the I/O. Therefore, the suggestion is to use PP striping unless you have a good reason for LV striping. Moreover, storage vendors generally do not recommend LV striping, because their equipment already does that job for you and LV striping may increase storage controller CPU consumption. If you do use LV striping, keep in mind the following best practices:

· Create a dedicated volume group for striped logical volumes

· Use an LV strip size larger than or equal to the stripe size on the storage side, to ensure full-stripe writes.

Usually, the LV strip size should be larger than 1 MB.

To create a striped logical volume, use mklv -S stripSize -C stripewidth. Figure 3 illustrates the architecture behind a striped logical volume.
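To make the strip-size rule and the striped layout more concrete, here is a minimal sketch that assumes a plain RAID-0 style mapping: consecutive strips go to consecutive hdisks in the stripe width. The helper names, hdisk names and sizes are hypothetical and only illustrate the arithmetic.

```python
MIB = 1024 * 1024


def strip_size_ok(lv_strip_size, array_stripe_size):
    """True when the LV strip is at least as large as the array's stripe."""
    return lv_strip_size >= array_stripe_size


def locate(offset, strip_size, pvs):
    """Map a byte offset in a striped LV to (PV name, strip index on that PV)."""
    strip_no = offset // strip_size
    return pvs[strip_no % len(pvs)], strip_no // len(pvs)


pvs = ["hdisk1", "hdisk2", "hdisk3", "hdisk4"]  # stripe width of 4
strip = 2 * MIB  # hypothetical LV strip size
print(strip_size_ok(strip, 1 * MIB))
for offset_mib in (0, 2, 8, 11):
    print(offset_mib, locate(offset_mib * MIB, strip, pvs))
```

The mapping shows why adding a physical volume later is awkward: changing the number of hdisks changes where every strip belongs, so the data would have to be redistributed.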

Sequential I/O Best Practices

The best practice for sequential I/O is to use PP striping and guarantee enough throughput. You can use RAID 5 or 6 arrays with traditional disk drives, because this workload consists of long read and write operations. Here, bandwidth is more relevant than latency. Also, use big or scalable VGs, because their limits are higher and they support LVs without an LVCB, which matters only for raw LVs. In addition, use RAID in preference to LVM mirroring, since it reduces I/Os: there are no additional writes for mirror write consistency.

Filesystem Best Practices

A rule of thumb for filesystems is to use JFS2, since it provides enhanced features such as online filesystem shrinking and better i-node management. In addition, it’s suggested to create JFS2 filesystems with the INLINE log option. This option places the log inside the logical volume that holds the JFS2 file system, thus avoiding hot spots related to log logical volumes. To create a file system with an INLINE log, execute:

crfs -v jfs2 -g <volume group> -m <mount point> -a size=<size> -a logname=INLINE

Remember, this option is only available at filesystem creation time. To change an existing file system, it must be removed and recreated.

In addition, applications generally use conventional I/O. That is, data transferred to or from the application buffer passes through the filesystem cache and then to disk, and the filesystem takes care of inode locking. However, for applications such as databases that ensure their own data integrity (locking) and have their own cache buffers, conventional I/O adds overhead and hurts performance, because these services are not necessary.

Therefore, in these situations it’s suggested to use filesystems with concurrent I/O (CIO), which bypasses the file system cache layer (VMM) and exchanges data directly with the disk. An application that already has its own cache buffer and locking mechanism is likely to benefit from CIO. The following command enables CIO on the filesystem:

chfs -a options=rw,cio <file system>

Using CIO provides raw logical volume performance with file system benefits. Keep in mind that some applications now make CIO calls directly, without requiring CIO mounts.

The last best practice for filesystems is to use the noatime option. This option disables file last access time (atime) updates for JFS2. The kernel updates this timestamp every time a file is accessed, and applications generally do not check it. Therefore, disable atime updates with noatime. Some documents claim up to 35% improvement with CIO and noatime mounts. The following command enables noatime:

mount -o rw,noatime /myfilesystem

Word of caution: Check with the application vendor whether CIO and noatime are supported.

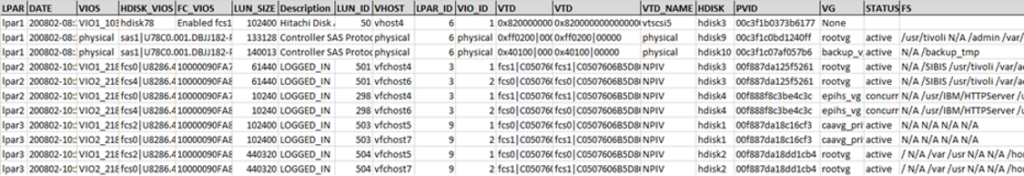

Document the Environment

Documentation is important in any IT environment. I like to automate the gathering of the storage and LPAR configuration. For example, I like to create scripts that generate spreadsheets showing the SAN, PowerVM/VIOS and LVM configuration for each LPAR. This will help you troubleshoot and plan your SAN and AIX environment better. Figure 4 illustrates one of these reports.
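As one possible starting point for such a script, the sketch below turns lspv-style output into CSV that a spreadsheet can open. It is not the script behind the report above. The sample text, PVIDs and function names are made up for illustration, and the parser assumes the usual whitespace-separated columns (hdisk name, PVID, volume group, state).

```python
import csv
import io

# Hypothetical lspv output; on a real host this text would come from the command.
SAMPLE_LSPV = """\
hdisk0          00f6db0a6c7aece5                    rootvg          active
hdisk1          00f6db0a6c7aed10                    datavg          active
hdisk2          none                                None
"""


def parse_lspv(text):
    """Parse whitespace-separated lspv lines into a list of dicts."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        state = fields[3] if len(fields) > 3 else ""
        rows.append({"hdisk": fields[0], "pvid": fields[1],
                     "vg": fields[2], "state": state})
    return rows


def to_csv(rows):
    """Render the parsed rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["hdisk", "pvid", "vg", "state"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


rows = parse_lspv(SAMPLE_LSPV)
report = to_csv(rows)
print(report)
```

The same pattern can be extended with columns from other commands, merged per LPAR, so that the inventory is regenerated on a schedule rather than maintained by hand.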

Final Recommendations

Explaining all of the best practices available for AIX in a SAN environment is beyond the scope of two articles. However, some routines are always best practices: read the documentation, keep learning, have a lab or test system to play with, document your environment, keep firmware and the OS current, and baseline and monitor SAN performance, among others worth practicing.